Santa Mode & Video in Advanced Voice

Google built an AI tool that can do research for you

In today’s email:

☠️ ChatGPT and Sora experienced a major outage

👁️ AI Godmother Fei-Fei Li Has a Vision for Computer Vision

🤖 Google unveils Project Mariner: AI agents to use the web for you

🧰 9 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

OpenAI has added video and screen sharing to Advanced Voice Mode in ChatGPT, letting users bring live visuals into their conversations in real time. To use the feature, click the video button in Advanced Voice Mode. It’s suited to activities like troubleshooting, teaching, or sharing content. For example, you can ask ChatGPT to guide you through making pour-over coffee, or share your screen to get help crafting a reply to a message.

In addition to video, ChatGPT now includes a seasonal feature: conversations with Santa Claus! Users can interact with Santa in his signature jolly voice, asking about his favorite traditions, reindeer, or even beard care tips. This feature, accessible via a snowflake icon or the settings page, is designed for festive fun. Santa is available globally wherever voice mode is supported, and the first conversation with him comes with a reset on Advanced Voice usage limits, ensuring everyone gets a chance to chat without restrictions.

These features are rolling out across mobile, desktop, and web platforms starting today, with availability for Plus, Pro, and Enterprise users varying by region and plan. This update makes ChatGPT more interactive and versatile, whether through live video, festive chats with Santa, or screen-sharing for collaboration. OpenAI is thrilled to see how users leverage these capabilities during the holiday season and beyond.

Fei-Fei Li, a Stanford University professor and AI pioneer, is pushing the boundaries of computer vision with her new startup, World Labs. Known for her pivotal role in creating the ImageNet dataset, which revolutionized AI object recognition, Li now focuses on equipping AI with "spatial intelligence." Her work emphasizes the critical importance of understanding the 3D nature of the world for AI systems, enabling machines to generate, interact with, and reason within three-dimensional environments. At the recent NeurIPS conference, she described this effort as "ascending the ladder of visual intelligence," highlighting the evolutionary interplay between perception and action that propels intelligence forward.

World Labs is creating immersive 3D scenes where objects maintain permanence and comply with physics, a leap beyond current 2D and video-generation tools. These advancements hinge on significant computational resources, which Li acknowledges as a challenge. She advocates for greater public-sector access to computing power, emphasizing that technological tools like AI and data are modern equivalents of historical innovations such as telescopes and microscopes. Through her work with Stanford's Institute for Human-Centered AI (HAI), Li has been advocating for initiatives like the National AI Research Resource (NAIRR) to democratize access to AI technology for broader innovation.

Li envisions transformative applications for 3D spatial intelligence, from designing homes and navigating medical imaging to advancing robotics and augmented reality (AR). She imagines AI-enabled tools that guide users through practical tasks, such as repairing a car or exploring national parks with AR glasses that provide contextual information. Believing the rapid pace of technological advancement will make these possibilities a reality in our lifetime, she sees spatial intelligence as a cornerstone for unlocking creativity, productivity, and practical solutions across diverse fields.

Google has introduced Deep Research, an AI-powered tool available through its Gemini bot, designed to streamline online research. Exclusively offered to Gemini Advanced subscribers, the tool enables users to request detailed reports on specific topics. By creating a customizable "multi-step research plan," Gemini searches for relevant information online, conducts related follow-ups, and produces a comprehensive summary of its findings, complete with source links. Users can refine the report, delve deeper into specific areas, or export it to Google Docs for further use.

This feature, part of Google’s broader Gemini 2.0 launch, highlights the company’s focus on "agentic AI": AI systems capable of performing autonomous tasks. Deep Research echoes similar tools, like Perplexity’s Pages, that generate tailored outputs based on prompts. Google’s announcement also included the release of Gemini 2.0 Flash, an upgraded model optimized for speed, now available to developers. These advancements signal Google’s commitment to developing tools that enhance productivity and simplify complex tasks.

Currently, Deep Research is accessible only in English via the Gemini web interface, where users must select "Gemini 1.5 Pro with Deep Research" from the model dropdown. As AI tools become increasingly versatile, Google’s move positions Gemini as a leader in creating solutions that integrate search, synthesis, and reporting into a single seamless experience.

Related:

- Google unveils Project Mariner: AI agents to use the web for you

- Google unveils AI coding assistant ‘Jules,’ promising autonomous bug fixes and faster development cycles

Other stuff

ChatGPT and Sora experienced a major outage

Meta releases AI model to enhance Metaverse experience

Meta debuts a tool for watermarking AI-generated videos

Character·AI has retrained its chatbots to stop chatting up teens

Cartesia claims its AI is efficient enough to run pretty much anywhere

It sure looks like OpenAI trained Sora on game content

Microsoft just announced Copilot Vision, which is now in preview

All your ChatGPT images in one place 🎉

You can now search for images, see their prompts, and download all images in one place.

TwelveLabs - Multimodal AI that understands videos like humans

Deta Surf - A browser, file manager, and AI assistant in one clean app

Bricks - The AI Spreadsheet We’ve All Been Waiting For

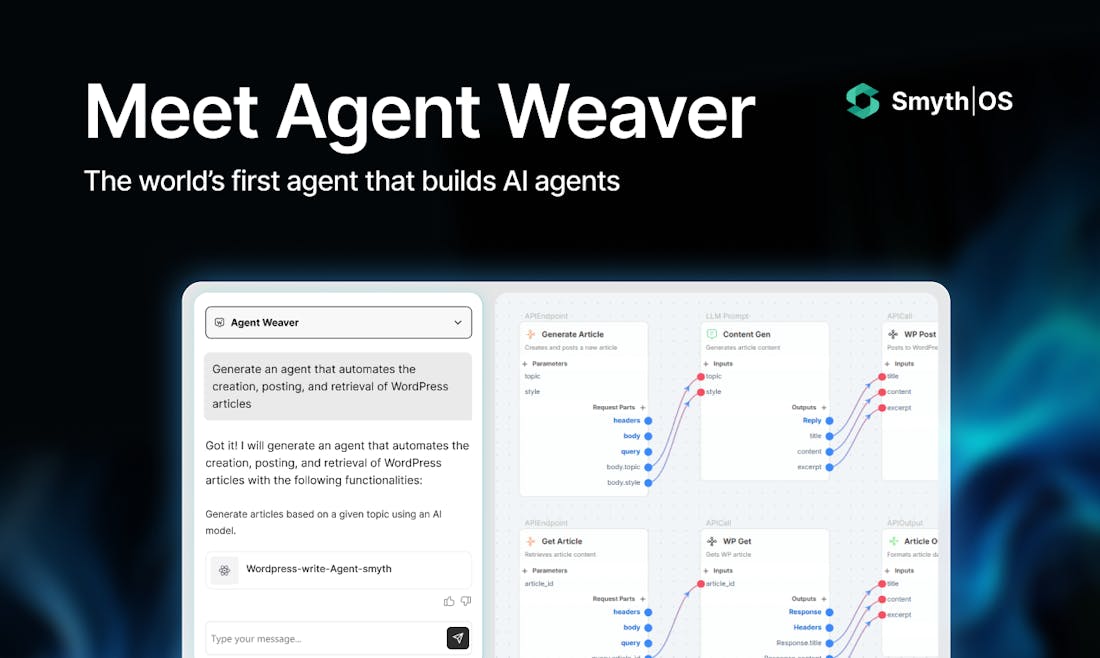

SmythOS - Build, debug, and deploy AI agents in minutes

AI Santa by Tavus - Video chat with Santa, anywhere anytime

AISmartCube - Build AI tools like you're playing with Legos

Remention - Place your product in billions of online discussions with AI

Shortcut - Your AI partner that works at the speed of voice

RODCast - Your favorite Reddit threads as podcasts

Unclassified 🌀

WFH Team - Work from anywhere in the world

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.
