Introducing OpenAI o1-preview

‘He surprised me’: Oprah on her new AI special with Sam Altman

In today’s email:

☠️ AI chatbots might be better at swaying conspiracy theorists than humans

👨🏻‍💻 One of the best ways to get value for AI coding tools: generating tests

🦾 Face-to-face with Figure’s new humanoid robot

🧰 11 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

A new series of reasoning models for solving hard problems. Available starting 9.12

OpenAI has introduced its latest generative AI model, code-named Strawberry and officially named o1. This new model family includes two variants: o1-preview and o1-mini, the latter being a more efficient version tailored for code generation. Available starting Thursday to ChatGPT Plus and Team subscribers, with enterprise and educational users gaining access early the following week, o1 offers enhanced capabilities but comes with certain limitations. Unlike its predecessor, GPT-4o, o1 currently lacks web browsing and file analysis features, and its image analysis capabilities are temporarily disabled pending further testing. Additionally, o1 is subject to rate limits, allowing 30 messages per week for o1-preview and 50 for o1-mini, and it is priced significantly higher than previous models, costing $15 per million input tokens and $60 per million output tokens via the API.
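To put the API pricing in perspective, here is a rough back-of-the-envelope sketch using the two per-token rates quoted above. The function name and the token counts in the example are made up for illustration, and real bills may differ depending on how tokens are counted.

```python
# Prices quoted above, in USD per one million tokens.
INPUT_PRICE_PER_MILLION = 15.00
OUTPUT_PRICE_PER_MILLION = 60.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost of a single API request.

    Hypothetical helper for illustration only; not part of any OpenAI library.
    """
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Illustrative request: 2,000 input tokens and 5,000 output tokens.
print(f"${estimate_cost(2_000, 5_000):.2f}")
```

At these rates, output tokens dominate the cost, so long answers are what make a request expensive.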

One of o1’s standout features is its ability to fact-check itself through an advanced chain of reasoning. This capability allows the model to spend more time deliberating on each query, enabling it to handle complex tasks that require synthesizing multiple subtasks. OpenAI’s research scientist Noam Brown explained that o1 is trained with reinforcement learning, encouraging the model to “think” before responding by using a private chain of thought and optimizing its reasoning with specialized datasets. Early feedback from users like Pablo Arredondo of Thomson Reuters highlights o1’s superior performance in areas such as legal analysis and problem-solving in LSAT logic games, as well as impressive results in mathematical competitions and programming challenges.

Despite its advancements, o1 is not without drawbacks. Users have reported that the model can be slower in generating responses, sometimes taking over ten seconds for certain queries. Additionally, there are concerns about increased instances of hallucinations, where o1 may confidently provide incorrect information and is less likely to admit uncertainty compared to GPT-4o. These issues indicate that while o1 represents a significant step forward in AI reasoning and factual accuracy, it still requires further refinement to achieve flawless performance.

OpenAI faces stiff competition from other AI developers such as Google DeepMind, which is also enhancing model reasoning capabilities through increased compute time and guided responses. To maintain a competitive edge, OpenAI has chosen to keep o1’s raw chains of thought private and to display summarized versions instead. The true test for o1 will be its widespread availability and cost-effectiveness, as well as OpenAI’s ability to keep improving the model’s reasoning abilities over time. As the AI landscape evolves, o1 sets a high benchmark for future self-fact-checking generative models.

Related:

- Notes on OpenAI’s new o1 chain-of-thought models

- A review of OpenAI o1 and how we evaluate coding agents

- Just used OpenAI o1 to create a 3D version of Snake in under a minute!

- Some of our researchers behind OpenAI o1 🍓

Automate Phone Calls with Synthflow AI

Always-on AI voice assistants to automate your calls.

Book appointments, transfer calls, and extract valuable info.

Integrates with your CRM, easy setup, no coding required.

Belief in conspiracy theories is widespread, especially in the United States, where up to half of the population may subscribe to at least one outlandish claim. Traditionally, these beliefs have been resistant to debunking, with individuals often doubling down when confronted with factual evidence due to motivated reasoning. However, a recent study published in Science challenges this notion by demonstrating that AI chatbots can effectively reduce the strength of conspiracy beliefs.

The research, led by psychologist Gordon Pennycook from Cornell University, involved 2,190 participants who held various conspiracy beliefs. These individuals engaged in personalized conversations with an AI chatbot powered by GPT-4 Turbo. The chatbot provided tailored, evidence-based counterarguments specific to each participant’s unique conspiracy theory. The responses were meticulously fact-checked, ensuring high accuracy. The interactions, averaging eight minutes, resulted in a significant 20% decrease in conspiracy beliefs, a reduction that persisted even two months later.

Co-author Thomas Costello highlighted that the chatbot’s ability to customize debunking efforts was crucial, as conspiracy theories are highly heterogeneous and vary greatly from person to person. This tailored approach contrasts with traditional broad debunking methods, which often fail to address the specific nuances of individual beliefs. Additionally, the study found that the chatbot not only diminished specific conspiracy beliefs but also had spillover effects, making participants less generally conspiratorial and more inclined to reject misinformation on social media.

Despite its success, the chatbot was less effective in addressing emerging conspiracy theories, such as those surrounding a recent assassination attempt on former President Donald Trump, due to the limited availability of factual information in the immediate aftermath of such events. Nevertheless, the study suggests that AI chatbots hold significant promise in combating misinformation by providing precise, evidence-based counterarguments, thereby offering a powerful tool to mitigate the spread and persistence of conspiracy theories.

Oprah Winfrey has launched a new ABC special titled “AI and the Future of Us,” which features an in-depth interview with Sam Altman, CEO of OpenAI. The special aims to demystify artificial intelligence for the general public by bringing together experts from various fields, including technology, government, and the humanities. Oprah sought to create a platform where complex AI topics could be explained in simple terms, ensuring that viewers gain a clear understanding of both the potential benefits and the inherent risks associated with AI advancements.

In the interview, Oprah expresses her initial concerns about AI, highlighting issues such as misinformation, deepfakes, and the potential for enhanced cyberattacks. She emphasizes the importance of developing a “suspicion muscle” to navigate the challenges posed by AI-generated content, advocating for a balanced approach that neither succumbs to panic nor falls into apathy. Oprah shares her skepticism about the current regulatory frameworks, fearing that lawmakers may lag behind the rapid developments in AI technology, much like they did with previous technological advancements.

Despite her concerns, Oprah also acknowledges the positive impacts AI can have, particularly in education and healthcare. She envisions a future where every child has access to personalized tutoring and where medical professionals can spend more quality time with patients, free from clerical burdens. Her collaboration with Sam Altman and other key figures underscores her commitment to fostering a nuanced conversation about AI, one that recognizes its transformative potential while addressing its societal implications.

Throughout the production of the special, Oprah herself has become more engaged with AI, transitioning from skepticism to regular use. She admits to initially avoiding AI tools but has since integrated them into her daily life, finding practical applications such as planning travel accommodations. This personal shift reflects her broader message in the special: AI is an inevitable part of the future, and proactive engagement is essential for harnessing its benefits while mitigating its risks. Oprah hopes that the special will serve as an alert to both the public and policymakers, encouraging informed and thoughtful discourse on the trajectory of artificial intelligence.

Other stuff

HeyGen introduced Avatar 3.0!

Salesforce unleashes its first AI agents

One of the best ways to get value for AI coding tools: generating tests

Hasbro’s CEO Thinks D&D‘s Adoption of AI Is Inevitable

Face to face with Figure’s new humanoid robot

Mistral releases Pixtral 12B, its first multimodal model

All your ChatGPT images in one place 🎉

You can now search for images, see their prompts, and download all images in one place.

PaperQA2, the first AI agent that conducts entire scientific literature reviews on its own

Shopsense AI lets music fans buy dupes inspired by red-carpet looks at the VMAs

Fish Speech - Open-source Text to Speech model

Command AI - AI for your users

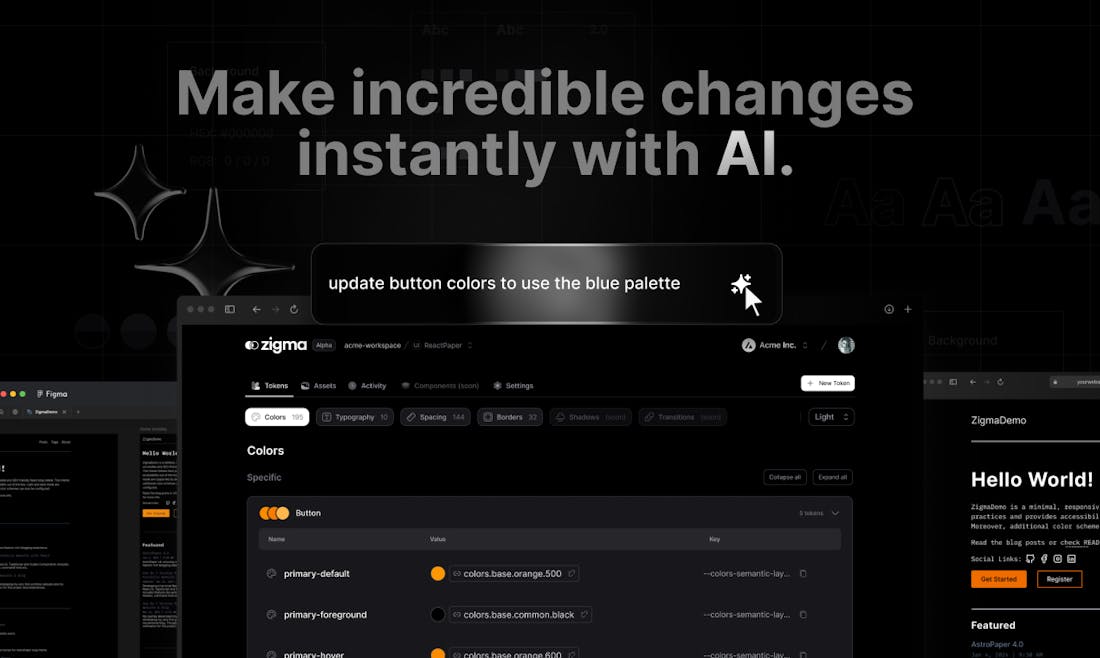

Zigma by NextUI (YC S24) - Connect your design files to production code in minutes

Sparky - Guided journaling and reflection

Fujiyama - Practice natural Japanese conversation in real-time with AI

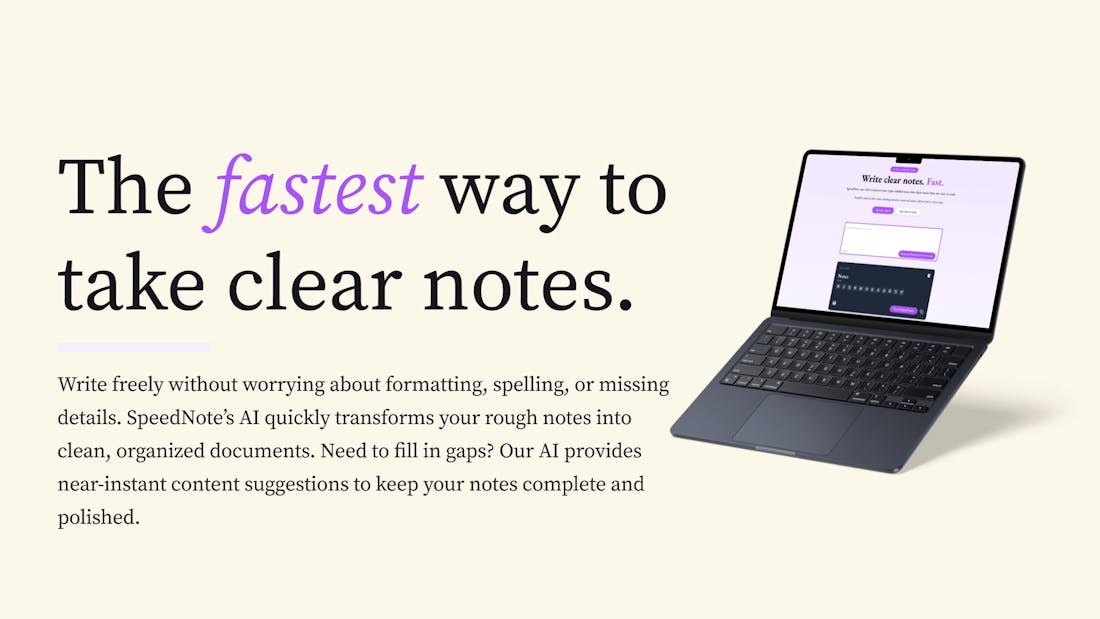

SpeedNote AI - Convert your typo-riddled mess into clear notes

Serra - Find the best talent with AI

Patched - Open-source workflow automation for development teams

Fit.AI - Ultra personalized fitness

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.
