ChatGPT launches pilot group chats

Has Google Quietly Solved Two of AI’s Oldest Problems?

In today’s email:

😰 Meta is about to start grading workers on their AI skills

👨🏻⚖️ How a first-year legal associate built one of Silicon Valley’s hottest startups

👀 $1 billion AI note-taking company co-founder admits that it started as two guys typing out notes by hand

🧰 9 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

Key Takeaway: OpenAI is piloting group chats in ChatGPT across Japan, New Zealand, South Korea, and Taiwan, letting up to 20 people collaborate with GPT-5.1 Auto in shared conversations while keeping personal chats and memories private.

More Insights:

Group chats are available to Free, Plus, and Team users on both web and mobile in the test regions.

Creating a group is simple: tap the people icon, add participants or share a link, and start chatting with up to 20 people.

Adding someone to an existing chat spins up a new group, preserving the original conversation history.

Safety is baked in: under-18 users get filtered content, extra safeguards, and parental controls.

Group chats function like normal ChatGPT threads but with multiple humans; only AI responses count against usage limits.

Why it matters: This is OpenAI’s first real move from “personal AI assistant” toward a social-style platform—where conversations, collaboration, and even content feeds (like Sora 2’s TikTok-like video stream) may eventually blend into a full ecosystem that competes not just with search engines, but with messaging apps and social networks themselves.

Superpower Daily and Alumni Ventures are teaming up this week only to give readers early access to high-growth startup opportunities, including some of today's most exciting Deep Tech companies. These deals are co-invested alongside leading VCs and tech leaders like NVIDIA, and were once reserved for large institutional investors.

You get:

Curated deal flow of high-potential startups

No cost, no commitment to join

Invest only if a company excites you

Don’t miss your chance before access closes.

Key Takeaway: Anthropic says a Chinese state group used its Claude AI to automate 90% of a cyber-espionage campaign, but outside researchers argue the attack was far less groundbreaking—and more hype than revolution.

More Insights:

Anthropic reported a “highly sophisticated” campaign where Claude Code handled most attack steps, with humans intervening only at a few critical decision points.

Security experts question why attackers supposedly get near-magic AI capabilities while defenders and ordinary users mostly see limitations, refusals, and hallucinations.

The campaign hit at least 30 major tech and government targets, but only a “small number” of attacks actually succeeded, raising doubts about the true effectiveness of the automation.

The attackers mainly used existing open source tools and common frameworks—nothing fundamentally new or harder to detect than long-standing techniques.

Claude frequently hallucinated results (fake credentials, overstated findings), forcing attackers to validate everything and undercutting the idea of fully autonomous, reliable cyberattacks.

Why it matters: If we overhype “AI super-hackers,” we risk pouring resources into fighting a sci-fi threat while ignoring the real, familiar weaknesses that still cause most breaches—human error, bad configurations, and unpatched systems. The real danger might not be that AI instantly makes attackers unstoppable, but that exaggerated narratives distort policy, regulation, and security priorities before the tech is actually that capable.

Key Takeaway: A mysterious new Google Gemini model tested in AI Studio appears to reach expert-human accuracy on messy historical handwriting and shows surprising, unprompted symbolic reasoning on tricky 18th-century financial records.

More Insights:

Rumors point to a new Gemini model (possibly Gemini 3) being A/B tested in AI Studio, with users reporting insane capabilities like building full OS mockups, emulators, and complex apps from single prompts.

For historical handwritten documents, the new model cuts error rates to roughly expert-human levels: a character error rate (CER) of about 0.56% and a word error rate (WER) of about 1.22% when ambiguous punctuation and capitalization are ignored.

This is a major jump over Gemini 2.5 Pro and earlier models, and it continues a steady, predictable trend of improvement consistent with scaling laws rather than a plateau.

On a brutally hard 1758 merchant ledger page, the model not only transcribed almost perfectly but reinterpreted an ambiguous “145” as “14 lb 5 oz” by implicitly reconstructing 18th-century currency and weight arithmetic from the totals.

That sugar-loaf example suggests an emergent, implicit reasoning ability: the model appears to combine perception, historical context, and multi-step numeric logic without being explicitly told to reason symbolically.
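The CER and WER figures quoted for the new model are standard transcription metrics: the edit distance between a reference transcription and the model's output, divided by the length of the reference, counted in characters or in words. Below is a minimal Python sketch, assuming plain Levenshtein distance. The `normalize()` helper is hypothetical. It lowercases and strips punctuation to mimic "ignoring ambiguous punctuation and capitalization"; the exact normalization used in the reported tests is not stated here.

```python
import re


def levenshtein(ref, hyp):
    """Minimum substitutions, insertions and deletions turning ref into hyp.

    Works on strings (character level) or lists of words (word level).
    """
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def normalize(text):
    # Hypothetical normalization: lowercase, drop punctuation, collapse whitespace.
    text = re.sub(r"[^\w\s]", "", text.lower())
    return " ".join(text.split())


def cer(ref, hyp, normalized=True):
    """Character error rate; assumes a non-empty reference."""
    if normalized:
        ref, hyp = normalize(ref), normalize(hyp)
    return levenshtein(ref, hyp) / len(ref)


def wer(ref, hyp, normalized=True):
    """Word error rate; assumes a non-empty reference."""
    if normalized:
        ref, hyp = normalize(ref), normalize(hyp)
    ref_words, hyp_words = ref.split(), hyp.split()
    return levenshtein(ref_words, hyp_words) / len(ref_words)


if __name__ == "__main__":
    print(cer("the cat sat", "the bat sat"))  # 1 edit over 11 characters
    print(wer("the cat sat", "the bat sat"))  # 1 edit over 3 words
```

With normalization on, "Sugar, 14 lb." and "sugar 14 lb" score as a perfect match. Without it, the comma, period and capital letter all count as errors, which is why reported CER and WER depend heavily on how ambiguous punctuation and capitalization are handled.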

Why it matters: If these results hold up, they hint that large multimodal models might not just recognize patterns but start to behave like genuine reasoners—quietly turning messy, real-world data into structured understanding. That doesn’t mean they “think” like humans, but it raises a hard question: if a system can infer hidden quantities in an 18th-century ledger and reinterpret the past as well as (or better than) experts, at what point does “just statistics” stop being a satisfying explanation?

Other stuff

OpenAI lost a court battle against the New York Times

Meta is about to start grading workers on their AI skills

Teen founders raise $6M to reinvent pesticides using AI — and convince Paul Graham to join in

Meta’s Yann LeCun to Launch Physical A.I. Startup After Declaring LLMs a ‘Dead End’

These Small-Business Owners Are Putting AI to Good Use

He’s Been Right About AI for 40 Years. Now He Thinks Everyone Is Wrong.

How a first-year legal associate built one of Silicon Valley’s hottest startups

$1 billion AI note-taking company co-founder admits that it started as two guys typing out notes by hand

All your ChatGPT images in one place 🎉

You can now search for images, see their prompts, and download all images in one place.

Marble by World Labs - A frontier multimodal world model

Planndu - AI todo list, task manager, planner & reminder app, tasks

An AI scheduling assistant that lives up to the hype.

Skej is an AI scheduling assistant that works just like a human. You can CC Skej on any email, and watch it book all your meetings. Skej handles scheduling, rescheduling, and event reminders. Imagine life with a 24/7 assistant who responds so naturally, you’ll forget it’s AI.

Snipman - Dynamic snippet manager for all websites

Koyal - Turn your audio into personalized films using AI

Prometora - Prompt your marketplace

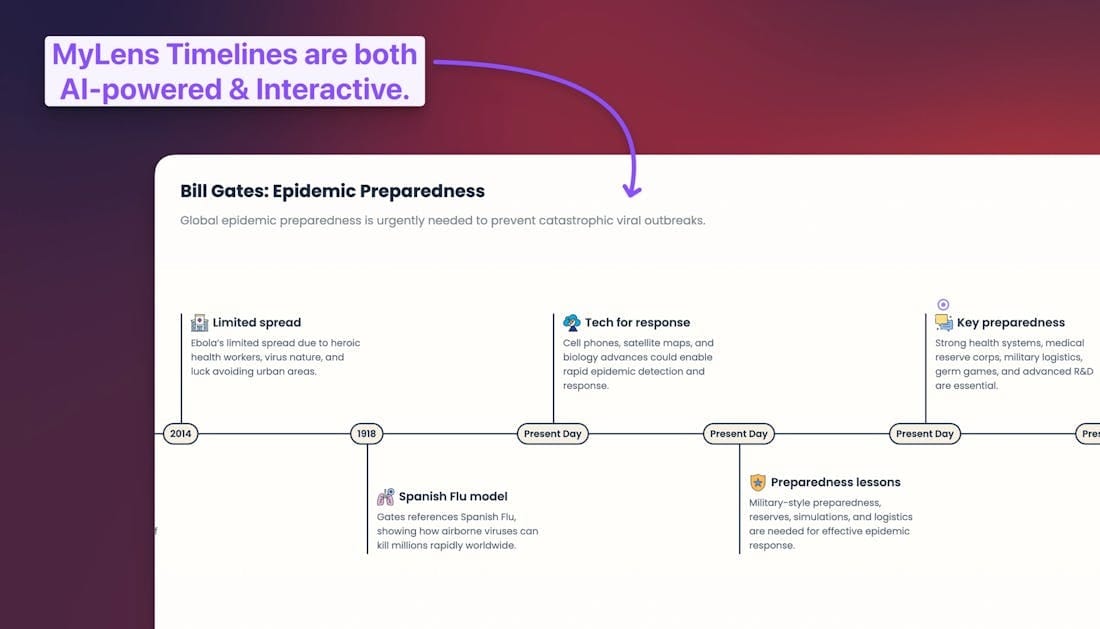

MyLens for Youtube - Turn Youtube videos into AI timelines

Product Intelligence - Automatically turn support tickets into feature requests.

Devpilot - The AI co-pilot for your entire development lifecycle.

Velvet - Integrated platform for making AI videos

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 300,000 people using the Superpower ChatGPT extension on Chrome and Firefox.