OpenAI Develops System to Track Progress Toward AGI

First-ever 'Miss AI' competition winner just announced

In today’s email:

👨🏻‍🦯 ‘Visual’ AI models might not see anything at all

🤖 Watch a robot navigate the Google DeepMind offices using Gemini

🤑 Marc Andreessen just sent $50,000 to an AI agent

🧰 8 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

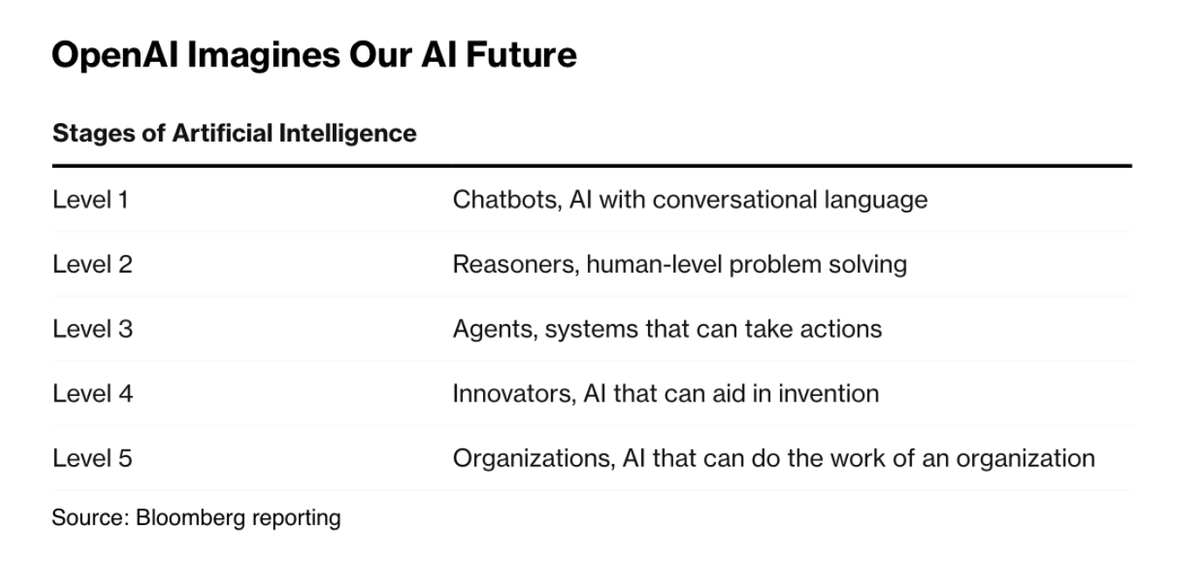

OpenAI has introduced a five-level system for tracking its progress toward artificial general intelligence (AGI), intended to help people understand its thinking on AI safety and the future of AI. The company believes it is currently at Level 1, where AI can engage in conversational language, and that it is on the verge of reaching Level 2, known as “Reasoners.” This tier describes AI systems that can perform basic problem-solving tasks as well as a human with a doctorate-level education who has no access to tools. During a recent all-hands meeting, OpenAI executives demonstrated a research project using the GPT-4 model that they said showed new, human-like reasoning skills.

The company, a leader in developing advanced AI technologies, has long been working toward AGI, which CEO Sam Altman predicts could be achieved within this decade. The new classification system, shared with employees and soon to be presented to investors, outlines a structured path from conversational AI to systems that can run an organization (Level 5). OpenAI's approach resembles frameworks proposed by other AI researchers, such as those at Google DeepMind, who have also advocated a tiered view of AI development with stages such as “expert” and “superhuman.”

OpenAI’s five levels begin with today’s conversational AI (Level 1) and progress to “Reasoners” (Level 2), “Agents” that can act autonomously on a user’s behalf for days (Level 3), “Innovators” that can help generate new inventions (Level 4), and, ultimately, “Organizations” (Level 5), AI capable of doing the work of an entire enterprise. The system is still being refined, and OpenAI is seeking feedback from employees, investors, and board members that could lead it to adjust the tiers. The initiative is part of OpenAI’s stated effort to communicate its milestones and future goals more transparently.

The first "Miss AI" contest, organized by the influencer platform Fanvue, has sparked controversy for promoting unrealistic beauty standards. The contest crowned Kenza Layli, a fictional AI-generated Moroccan Instagram influencer, as the winner, which has drawn criticism from women in the AI industry. Critics argue that the contest objectifies women and sets a dangerous precedent by idealizing AI-generated images of women. Dr. Sasha Luccioni, an AI researcher, expressed disappointment, highlighting the lack of gender diversity in the field and the objectification of women through AI.

The contest, part of the World AI Creator Awards (WAICAs), named three AI-generated winners, judged on beauty, technical skill, and social media clout. Kenza Layli, created by Myriam Bessa, has over 200,000 Instagram followers and promoted "diversity and inclusivity" in her acceptance speech. However, critics say the contest blurs the line between AI-generated characters and their creators, causing confusion and raising concerns that it reinforces unrealistic beauty standards.

AI ethics researcher Dr. Margaret Mitchell questioned the contest's intent, noting that it seems to empower creators while actually reinforcing harmful beauty ideals. She emphasized the potential negative impact on young girls' self-images, drawing parallels to the issues caused by unrealistic portrayals of femininity in media like Barbie dolls. Mitchell warned that the trend could lead to increased body dysmorphia, eating disorders, and the use of unsafe beauty products among young teens.

The latest AI models, like GPT-4o and Gemini 1.5 Pro, are promoted as "multimodal," suggesting they understand images and audio alongside text. However, a recent study by Auburn University and the University of Alberta reveals these models don't "see" as humans do. Despite claims of "vision capabilities" and "visual understanding," the study showed that these models struggled with simple visual tasks, such as identifying overlapping shapes or counting objects. For example, GPT-4o was only 18% accurate at determining whether two circles were slightly overlapping.
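To put the overlapping-circles task in perspective, the correct answer comes from a one-line geometry check: two circles overlap when the distance between their centers is smaller than the sum of their radii. The Python sketch below is purely illustrative; the function name `circles_overlap` is hypothetical and is not code from the Auburn/Alberta study.

```python
import math


def circles_overlap(center_a, radius_a, center_b, radius_b):
    """Return True if two circles overlap (share some interior area).

    Circles that merely touch at a single point (distance == sum of radii)
    are reported as not overlapping.
    """
    # Euclidean distance between the two centers (math.dist needs Python 3.8+).
    distance = math.dist(center_a, center_b)
    return distance < radius_a + radius_b


if __name__ == "__main__":
    # Two unit circles whose centers are 1.9 apart: slightly overlapping.
    print(circles_overlap((0, 0), 1.0, (1.9, 0), 1.0))  # True
    # Centers 2.1 apart: a small gap separates them.
    print(circles_overlap((0, 0), 1.0, (2.1, 0), 1.0))  # False
```

The "slightly overlapping" cases the study highlights are exactly the ones where the center distance is just under the sum of the radii, which this arithmetic settles instantly.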

The study found that the models' visual processing looks more like pattern matching than true visual comprehension. Tasks that are straightforward for humans, such as counting interlocking circles, posed significant challenges for these models. Their success rates varied widely depending on the number of shapes and on whether similar images, like the Olympic Rings, were likely present in their training data. This suggests that the models rely heavily on patterns from their training data rather than on actual visual reasoning.

Despite these shortcomings, these AI models are not entirely ineffective. They excel in specific areas, such as recognizing human actions and everyday objects, where they perform with high accuracy. This research highlights the need for a nuanced understanding of AI capabilities, showing that while these models may not "see" in the human sense, they are still valuable for certain applications. However, it's crucial to recognize their limitations and not be misled by marketing claims of near-human visual understanding.

Generative AI has shown significant promise in robotics, enhancing natural language interactions, robot learning, no-code programming, and design. Google DeepMind’s robotics team has showcased another promising application: navigation. In their paper titled “Mobility VLA: Multimodal Instruction Navigation with Long-Context VLMs and Topological Graphs,” they demonstrate how Google Gemini 1.5 Pro can be used to teach robots to respond to commands and navigate an office space. The team used robots from Everyday Robots, a previous Alphabet project, to demonstrate the technology.

In a series of videos, DeepMind employees use a smart-assistant-style wake phrase, “OK, Robot,” to direct the system around the 9,000-square-foot office. In one video, a robot leads a person to a wall-sized whiteboard after being asked to take them somewhere they can draw. In another, a different robot follows directions written on a whiteboard to reach a robotics testing area, showing that it can understand and carry out multistep instructions.

The robots were first familiarized with the office through a process called “Multimodal Instruction Navigation with demonstration Tours (MINT),” in which a person guided each robot around the space and pointed out landmarks. This was combined with a hierarchical Vision-Language-Action (VLA) navigation policy for understanding and reasoning about the environment. As a result, the robots could respond to a range of commands, including written and drawn instructions as well as gestures, and navigate to the right places within the office.
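The paper’s title points to the general recipe: frames from the demonstration tour become nodes in a topological graph, a long-context VLM picks the tour frame that matches the user’s request, and the robot plans a route through the graph to that frame. The Python sketch below illustrates only the route-planning idea with a breadth-first search. It is not DeepMind’s implementation; `choose_goal_frame` is a hypothetical keyword-matching stand-in for Gemini, and the captions and extra edges are invented for the example.

```python
from collections import deque


def build_tour_graph(num_frames, extra_edges=()):
    """Link consecutive tour frames; extra_edges join frames taken at nearby spots."""
    graph = {i: set() for i in range(num_frames)}
    for i in range(num_frames - 1):
        graph[i].add(i + 1)
        graph[i + 1].add(i)
    for a, b in extra_edges:
        graph[a].add(b)
        graph[b].add(a)
    return graph


def shortest_path(graph, start, goal):
    """Breadth-first search on the unweighted graph; returns frame indices or None."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            # Walk back through parents to rebuild the route, then reverse it.
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for neighbor in sorted(graph[node]):  # sorted for deterministic output
            if neighbor not in parents:
                parents[neighbor] = node
                queue.append(neighbor)
    return None


def choose_goal_frame(instruction, frame_captions):
    """Hypothetical stand-in for the VLM: first frame whose caption shares a word."""
    words = set(instruction.lower().split())
    for idx, caption in enumerate(frame_captions):
        if words & set(caption.lower().split()):
            return idx
    return None


if __name__ == "__main__":
    captions = ["entrance lobby", "kitchen", "hallway", "whiteboard wall", "robotics test area"]
    graph = build_tour_graph(len(captions), extra_edges=[(0, 3)])
    goal = choose_goal_frame("take me somewhere to draw on the whiteboard", captions)
    print(goal)                            # 3
    print(shortest_path(graph, 0, goal))   # [0, 3]
```

In the real system the hard part is the goal selection, which the VLM handles from video and multimodal instructions; once a goal frame is known, a graph search like this is a cheap way to turn it into a sequence of waypoints.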

Other stuff

Marc Andreessen just sent $50,000 to an account on X called ‘Truth Terminal’, a semi-autonomous AI agent, so it can pay humans to help it spread out in the wild 🔥🔥

China leads the world in adoption of generative AI, survey shows

French Startup Bioptimus Releases AI Model for Disease Diagnosis

The sperm whale 'phonetic alphabet' revealed by AI

The AI-focused COPIED Act would make removing digital watermarks illegal

How Apple Intelligence is changing the way you use Siri on your iPhone

Wow. This AI-generated McDonald's ad is actually good. 🔥🔥

Lynx - the leading hallucination detection model

Generative AI is Giving A Boost To The Creator Economy

Paper from Google: Controlling Space and Time with Diffusion Models

All your ChatGPT images in one place 🎉

You can now search for images, see their prompts, and download all images in one place.

RenderNet Introduces Video FaceSwap

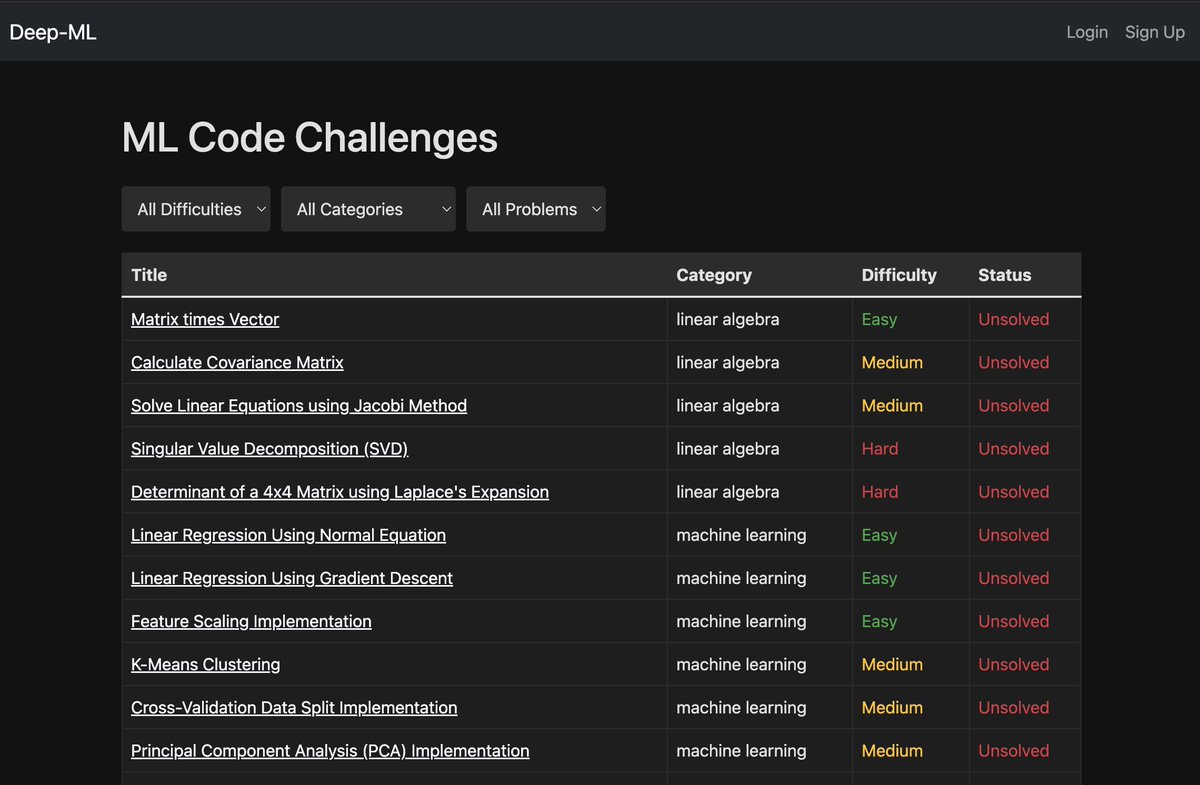

Deep ML - LeetCode for machine learning

SmartCrawl - Turns any website into an API with AI (Waitlist)

CrazyFace - AI-Generated Crazy YouTube Faces

Prepare for your interviews with cipher

Buildots - AI-powered progress tracking that accurately measures site performance and reduces delays by up to 50%.

AiEditor - An open-source AI-powered rich text editor

AnyoneCanAI - A simpler & faster way of creating valuable products with AI

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.
