OpenAI funds new AI healthcare venture

The moment we stopped understanding AI

In today’s email:

🤑 AI models that cost $1 billion to train are underway

📚 A mathematician's introduction to transformers and large language models

👀 Why So Many Bitcoin Mining Companies Are Pivoting to AI

🧰 8 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

The OpenAI Startup Fund and Thrive Global have partnered to launch Thrive AI Health, a new company dedicated to creating a hyper-personalized AI health coach. The mission is to democratize access to expert health coaching to improve health outcomes and address chronic diseases. Funded by the OpenAI Startup Fund, Thrive Global, and the Alice L. Walton Foundation, the company aims to leverage AI for behavior change across five key areas: sleep, food, fitness, stress management, and connection. DeCarlos Love, formerly of Google, will serve as CEO.

Thrive AI Health will utilize generative AI to provide personalized coaching, drawing on the latest peer-reviewed science, biometric data, and user preferences. The AI health coach will deliver insights, proactive coaching, and recommendations tailored to each user, supported by a unified health data platform with robust privacy and security measures. This initiative also includes partnerships with leading academic institutions like Stanford Medicine and the Rockefeller Neuroscience Institute to integrate the AI health coach into their communities.

Alice Walton and other leaders emphasize the potential of AI to enhance health and wellness accessibility. The AI health coach aims to reduce the prevalence of chronic diseases, which account for a significant portion of healthcare spending. By focusing on underserved communities, Thrive AI Health seeks to address health inequities and improve overall health outcomes.

Anthropic CEO Dario Amodei said in a recent podcast that the cost of training AI models is skyrocketing. Current models such as GPT-4 cost around $100 million to train, models costing around $1 billion are already underway, and he anticipates that within the next three years the cost could reach between $10 billion and $100 billion. He attributes this rapid increase to advances in algorithms and hardware, and predicts that future AI models could surpass human capabilities in many tasks. He emphasized that the path to artificial general intelligence (AGI) will be gradual, building on successive model improvements.
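To put those figures in perspective, going from roughly $100 million to $10 billion or $100 billion in three years implies a steep compound annual growth factor. The short sketch below computes it, assuming smooth compounding (in practice costs jump with each model generation rather than growing evenly); the function name is illustrative.

```python
def implied_annual_growth(cost_now: float, cost_later: float, years: float) -> float:
    """Compound annual growth factor g such that cost_now * g**years == cost_later."""
    return (cost_later / cost_now) ** (1.0 / years)

CURRENT_COST = 100e6  # ~$100 million per training run, as quoted in the article

# Assumption: growth compounds evenly over the three years.
for target in (10e9, 100e9):
    g = implied_annual_growth(CURRENT_COST, target, years=3)
    print(f"${target / 1e9:.0f}B in 3 years -> ~{g:.1f}x per year")
```

In other words, the quoted range corresponds to training costs growing roughly 4.6x to 10x every year.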

The demand for powerful hardware is a significant factor in the escalating costs. In 2023, training ChatGPT required over 30,000 GPUs, and companies like Nvidia now produce AI chips that cost up to $40,000 each. The trend is expected to continue, with data center GPU deliveries potentially increasing tenfold. High-profile players, including Elon Musk's companies and the OpenAI and Microsoft partnership, are planning massive investments in AI infrastructure, highlighting the intense competition and rapid growth in the field.
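Multiplying the two hardware figures gives a rough upper bound on the chip outlay alone. This is a back-of-envelope sketch under a loud assumption: every one of the 30,000 GPUs is priced at the quoted $40,000 ceiling, which the GPUs actually used for ChatGPT were not necessarily. The "tenfold" line applies the delivery-growth projection to a single cluster purely as a hypothetical scenario.

```python
GPU_COUNT_2023 = 30_000     # GPUs reportedly used for ChatGPT training in 2023
PRICE_CEILING_USD = 40_000  # upper end of the quoted per-chip price (assumed for every GPU)

def hardware_outlay(gpu_count: int, unit_price: float) -> float:
    """Upper-bound chip cost in dollars; ignores networking, power, staff, and discounts."""
    return gpu_count * unit_price

baseline = hardware_outlay(GPU_COUNT_2023, PRICE_CEILING_USD)
tenfold = hardware_outlay(10 * GPU_COUNT_2023, PRICE_CEILING_USD)  # hypothetical 10x cluster

print(f"30,000 GPUs:  ${baseline / 1e9:.1f}B")
print(f"300,000 GPUs: ${tenfold / 1e9:.1f}B")
```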

However, the surge in AI development brings substantial challenges, particularly in power supply and infrastructure. The energy consumption of AI data centers is immense, with last year's GPU power usage equivalent to that of 1.3 million homes. As AI models become more sophisticated and hardware demands increase, there is a growing need for sustainable energy solutions. Companies like Microsoft are exploring options such as modular nuclear power to meet these needs, underscoring the broader societal and environmental implications of the AI revolution.

Meta AI researchers have introduced MobileLLM, a compact and efficient language model tailored for smartphones and other resource-constrained devices. Detailed in a study published on June 27, 2024, by a team from Meta Reality Labs, PyTorch, and FAIR, the work focuses on models with fewer than 1 billion parameters, far smaller than models like GPT-4. The team, led by Chief AI Scientist Yann LeCun, combined established techniques such as embedding sharing and grouped-query attention with a block-wise weight-sharing scheme to improve performance.
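Grouped-query attention, one of the techniques named above, lets several query heads share each key/value head, which shrinks the K/V projections (and the K/V cache) without reducing the number of query heads. Below is a minimal NumPy sketch of a single causal self-attention layer with no biases; the function name, shapes, and head counts are illustrative assumptions, not MobileLLM's code.

```python
import numpy as np

def grouped_query_attention(x, wq, wk, wv, n_heads, n_kv_heads):
    """Causal self-attention where n_heads query heads share n_kv_heads K/V heads.

    x: (seq, d_model); wq: (d_model, n_heads * head_dim);
    wk, wv: (d_model, n_kv_heads * head_dim).
    """
    seq, _ = x.shape
    head_dim = wq.shape[1] // n_heads
    group = n_heads // n_kv_heads  # query heads served by each K/V head

    q = (x @ wq).reshape(seq, n_heads, head_dim).transpose(1, 0, 2)     # (H, S, D)
    k = (x @ wk).reshape(seq, n_kv_heads, head_dim).transpose(1, 0, 2)  # (KV, S, D)
    v = (x @ wv).reshape(seq, n_kv_heads, head_dim).transpose(1, 0, 2)

    # Reuse each K/V head for `group` consecutive query heads.
    k = np.repeat(k, group, axis=0)  # (H, S, D)
    v = np.repeat(v, group, axis=0)

    scores = q @ k.transpose(0, 2, 1) / np.sqrt(head_dim)  # (H, S, S)
    future = np.triu(np.ones((seq, seq), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)             # no attending to later tokens
    scores -= scores.max(axis=-1, keepdims=True)            # numerically stable softmax
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)

    out = weights @ v  # (H, S, D)
    return out.transpose(1, 0, 2).reshape(seq, n_heads * head_dim)

rng = np.random.default_rng(0)
d_model, n_heads, n_kv_heads, head_dim = 64, 8, 2, 8
x = rng.standard_normal((5, d_model))
wq = rng.standard_normal((d_model, n_heads * head_dim))
wk = rng.standard_normal((d_model, n_kv_heads * head_dim))
wv = rng.standard_normal((d_model, n_kv_heads * head_dim))
print(grouped_query_attention(x, wq, wk, wv, n_heads, n_kv_heads).shape)  # (5, 64)
```

With these toy sizes, the K and V projections hold 2 × 64 × 16 = 2,048 weights instead of the 2 × 64 × 64 = 8,192 that standard multi-head attention would need.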

MobileLLM's design favors depth over width (deep-and-thin architectures), improving accuracy on benchmark tasks by 2.7% and 4.3% over previous state-of-the-art models at the 125 million and 350 million parameter scales, respectively. The 350 million parameter version of MobileLLM also came close to the accuracy of the much larger 7 billion parameter LLaMA-2 model on API-calling tasks. This indicates that smaller models can offer similar functionality in targeted applications while consuming far fewer computational resources, in line with the trend toward more efficient and specialized AI designs.
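The depth-versus-width trade-off comes down to how a fixed parameter budget is spent. A rough sketch, assuming the common approximation of about 12·d² weights per transformer layer (4·d² for standard attention projections plus 8·d² for a two-matrix MLP with 4x expansion; MobileLLM's actual layers differ) and a hypothetical 90 million parameter non-embedding budget:

```python
def params_per_layer(d_model: int) -> int:
    """Approximate weights in one transformer layer, ignoring biases and norms.

    Assumes standard multi-head attention (Q, K, V, O: 4 * d^2) and a
    two-matrix MLP with 4x hidden expansion (8 * d^2).
    """
    return 12 * d_model * d_model

def layers_for_budget(budget: int, d_model: int) -> int:
    """How many layers of width d_model fit within a non-embedding parameter budget."""
    return budget // params_per_layer(d_model)

BUDGET = 90_000_000  # hypothetical budget, not a MobileLLM configuration

for d in (512, 768, 1024):
    n = layers_for_budget(BUDGET, d)
    print(f"d_model={d:5d}: {n:3d} layers, {n * params_per_layer(d) / 1e6:.1f}M params")
```

Because per-layer cost grows with the square of the width, the same budget buys 28 thin layers at width 512 but only 7 wide ones at width 1024; the paper's finding is that, at this scale, the deeper option tends to perform better.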

The model is not yet available for public use, but Meta has open-sourced the pre-training code for MobileLLM to encourage further research and development. This advancement marks an important step toward making sophisticated AI more accessible and sustainable, challenging the belief that effective language models must be vast. The potential applications of on-device AI are significant, though realizing them fully will depend on further technological progress.

The Activation Atlas offers a fascinating view into the high-dimensional embedding spaces that modern AI models use to organize and interpret data. AlexNet, a groundbreaking model introduced in 2012, was the first to demonstrate the potential of scaling up old AI ideas. It transformed computer vision by using a convolutional neural network to classify images from the ImageNet dataset. Its ability to detect patterns and features through successive layers of computing blocks laid the groundwork for today's sophisticated AI systems, such as OpenAI's ChatGPT.
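The basic operation inside a convolutional layer is sliding a small filter over the image and taking a weighted sum at each position. The sketch below uses a hand-written vertical-edge filter (a Sobel kernel) on a toy image; this filter is an illustrative assumption, since AlexNet learns its filters from data rather than having them specified.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Single-channel 2D cross-correlation (what CNN layers compute), no padding."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.empty((h - kh + 1, w - kw + 1))
    for i in range(out.shape[0]):
        for j in range(out.shape[1]):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hand-written vertical-edge detector (Sobel-x); a trained CNN learns filters like this.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]], dtype=float)

# Toy 6x6 image: dark left half, bright right half, so a single vertical edge.
image = np.zeros((6, 6))
image[:, 3:] = 1.0

response = np.maximum(conv2d_valid(image, SOBEL_X), 0)  # ReLU activation, as AlexNet uses
print(response)
```

Every row of the resulting 4x4 map reads `[0, 4, 4, 0]`: the filter fires only where its window straddles the edge. Deeper layers apply the same operation to these maps, combining edges into textures and object parts.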

ChatGPT operates through a stack of Transformer blocks, each performing fixed matrix operations on its input. When a user enters text, ChatGPT breaks it into words and word fragments (tokens), maps each token to a vector, and passes these vectors through the Transformer layers in sequence. Each layer refines the vectors further; the output of the last layer is turned into a prediction of the next token, and the whole pass repeats for every token of the response. In the latest versions, the data passes through more than 100 of these blocks, which highlights the immense computational power and vast training data required for such advanced language understanding and generation. Despite this complexity, the intelligence of these models comes from simple, repetitive operations combined with training on large datasets.
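The pipeline described above (split text into fragments, map them to vectors, run a stack of identical blocks, score the next token) can be sketched end to end in a few dozen lines. This is a toy under stated assumptions: a hypothetical seven-entry vocabulary, greedy longest-match splitting as a stand-in for real BPE tokenization, single-head attention, and random untrained weights, so the predicted token is meaningless. Only the flow of data mirrors the description.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical tiny vocabulary of words and word fragments.
VOCAB = ["<unk>", "un", "believ", "able", "the", "cat", " "]
TOKEN_ID = {t: i for i, t in enumerate(VOCAB)}
D_MODEL, N_LAYERS = 16, 4

def tokenize(text):
    """Greedy longest-match split into known fragments (a stand-in for BPE)."""
    ids, i = [], 0
    pieces = sorted(VOCAB[1:], key=len, reverse=True)
    while i < len(text):
        for p in pieces:
            if text.startswith(p, i):
                ids.append(TOKEN_ID[p])
                i += len(p)
                break
        else:  # no known fragment matches: emit <unk> and skip one character
            ids.append(TOKEN_ID["<unk>"])
            i += 1
    return ids

def layer_norm(x, eps=1e-5):
    mu, var = x.mean(-1, keepdims=True), x.var(-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)

def softmax(x):
    e = np.exp(x - x.max(-1, keepdims=True))
    return e / e.sum(-1, keepdims=True)

def make_block():
    """Random (untrained) weights for one block."""
    shapes = {"wq": (D_MODEL, D_MODEL), "wk": (D_MODEL, D_MODEL), "wv": (D_MODEL, D_MODEL),
              "w1": (D_MODEL, 4 * D_MODEL), "w2": (4 * D_MODEL, D_MODEL)}
    return {name: rng.standard_normal(s) / np.sqrt(D_MODEL) for name, s in shapes.items()}

def transformer_block(x, p):
    """Pre-norm block: causal single-head attention, then an MLP, each with a residual."""
    h = layer_norm(x)
    q, k, v = h @ p["wq"], h @ p["wk"], h @ p["wv"]
    scores = q @ k.T / np.sqrt(D_MODEL)
    scores[np.triu_indices(len(x), k=1)] = -np.inf  # each position sees only the past
    x = x + softmax(scores) @ v
    h = layer_norm(x)
    return x + np.maximum(h @ p["w1"], 0) @ p["w2"]

EMBED = rng.standard_normal((len(VOCAB), D_MODEL))  # one vector per vocabulary entry
BLOCKS = [make_block() for _ in range(N_LAYERS)]

def next_token(text):
    x = EMBED[tokenize(text)]              # (seq, D_MODEL)
    for p in BLOCKS:                       # same kind of fixed operations, layer after layer
        x = transformer_block(x, p)
    logits = layer_norm(x[-1]) @ EMBED.T   # score every vocabulary entry (tied embeddings)
    return VOCAB[int(np.argmax(logits))]

print(tokenize("unbelievable"))  # [1, 2, 3]
print(next_token("the cat"))     # arbitrary, because the weights are random
```

Generating a full response would mean appending the predicted token to the input and calling `next_token` again, once per token.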

AlexNet's significance extends beyond its immediate impact on image classification; it also provides a glimpse into how AI models learn and represent data in high-dimensional spaces. By visualizing activation maps and synthetic images, researchers could see how the model identified and processed features like edges, colors, and even faces. These insights into AlexNet's inner workings helped pave the way for understanding more complex models like ChatGPT. As AI continues to evolve, the principles of scaling and embedding spaces will likely remain central, with future breakthroughs potentially arising from even larger datasets and more sophisticated computational techniques.
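The "synthetic images" mentioned above come from feature visualization: freeze the network and run gradient ascent on the input to find what excites a unit most. Below is a deliberately simplified NumPy sketch, assuming a single linear unit whose 3x3 weights are a hypothetical edge-shaped pattern; real visualizations of AlexNet or the Activation Atlas backpropagate through many layers and add regularizers.

```python
import numpy as np

def neuron(x, w):
    """Pre-activation of a toy unit: dot product of a 3x3 input patch with its weights."""
    return float(np.sum(x * w))

def synthesize_input(w, steps=200, lr=0.1, seed=0):
    """Gradient ascent on the input (weights frozen), kept inside the unit L2 ball."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(w.shape) * 0.01  # start from faint noise
    for _ in range(steps):
        grad = w                              # d(sum(x * w))/dx = w for this linear unit
        x = x + lr * grad
        x /= max(1.0, np.linalg.norm(x))      # project back so that ||x|| <= 1
    return x

# Hypothetical "learned" weights of a vertical-edge unit.
W = np.array([[-1, 0, 1],
              [-2, 0, 2],
              [-1, 0, 1]], dtype=float)

img = synthesize_input(W)
print(np.round(img, 2))
print(f"activation: {neuron(img, W):.3f}")
```

Under a norm constraint, the input that maximizes a linear unit is its own weight pattern scaled to unit length, so the synthesized patch converges to a dark-to-bright vertical edge. This is one reason visualizations of early layers look like edges and color patterns, while deeper units produce more complex images.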

Other stuff

San Francisco’s AI boom can’t stop real estate slide, as office vacancies reach new record

LivePortrait can animate an image from a video reference with incredible accuracy. 🔥

A mathematician's introduction to transformers and large language models 🔥

Why So Many Bitcoin Mining Companies Are Pivoting to AI 🔥

The New York Times Is Suing OpenAI, and Experimenting With It for Writing Headlines and Copy Editing

All your ChatGPT images in one place 🎉

You can now search for images, see their prompts, and download all images in one place.

Screen Pipe - AI that knows everything about your life.

Groq in VSCode: Explaining and Documenting Entire Files in Seconds! 🤩

Paird AI - Ultra-Fast Code Generation. Powered by Groq

Sei - AI-powered Regulatory Compliance Platform

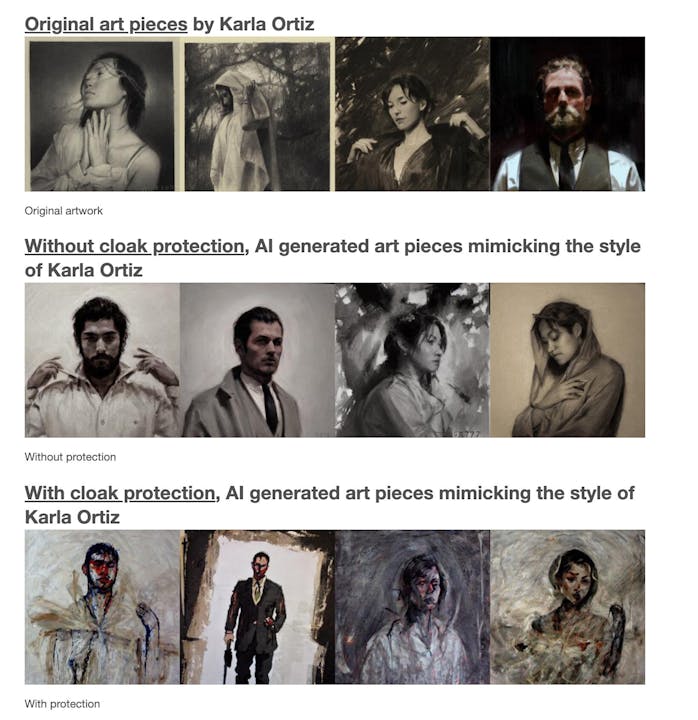

Glaze - Protect human artists by disrupting style mimicry

Micro Agent - An AI agent that writes (actually useful) code for you

Git2Log - AI Changelog Generator


Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.
