A Hacker Stole OpenAI Secrets 👀

AI lie detectors are better than humans at spotting lies

In today’s email:

🏎️ New AI Training Technique Is Drastically Faster, Says Google

☠️ OpenAI’s ChatGPT Mac app was storing conversations in plain text

🔥 Cloudflare launches a tool to combat AI bots

🧰 11 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

Early last year, a hacker breached OpenAI’s internal messaging systems and stole details about the design of its AI technologies. OpenAI discussed the breach internally at an all-hands meeting in April 2023 but did not disclose it publicly because no customer information was stolen. The incident nonetheless raised concerns among employees about foreign threats, particularly from China, and highlighted how hard it is for OpenAI to protect its technological secrets without hindering the global collaboration that AI advancement depends on.

Leopold Aschenbrenner, a former technical program manager at OpenAI, raised concerns about the company's security measures, suggesting they were insufficient to prevent potential espionage by foreign governments. Despite his dismissal, which OpenAI claims was not related to his concerns about security, Aschenbrenner continues to speak publicly about the vulnerabilities he perceives within the company. OpenAI maintains that while it values security, it disagrees with Aschenbrenner’s portrayal of its protocols.

The broader context of AI security is also shaping discussions at other tech companies and in government circles. Companies like Meta are opting to make their AI technologies open source, believing this helps improve security through broader scrutiny. Meanwhile, national security experts and government officials are increasingly debating the need for stricter regulations on AI technologies to prevent potential misuse, reflecting growing concerns about the strategic implications of AI advancements globally.

Elon Musk’s social networking app, X, is planning to integrate xAI’s Grok AI more deeply into its platform. Independent app researcher Nima Owji discovered new features being developed, such as asking Grok about X accounts, using Grok by highlighting text, and accessing Grok’s chatbot via a pop-up on the screen. These features, resembling the AI chat integration seen in productivity apps by Google and Microsoft, aim to provide users with more seamless and frequent access to Grok while navigating X.

One of the notable developments includes the ability to research X user profiles and search for terms within posts by highlighting text and clicking an “Ask Grok” button. This integration could allow users to learn more about accounts and information encountered on X without leaving the app. Despite these advancements, X’s in-app purchase revenue has been trending down, with May 2024 seeing $7.6 million in net revenue, a decline from previous months. Factors such as competition from other social apps and the premium subscription price might be contributing to this downturn.

Moreover, X’s user base appears to be adjusting to the app’s rebranding from Twitter to X, which might be affecting its performance. In May, the app saw a 32% drop in downloads compared to the previous year, with only 3 million downloads on the App Store. The company is facing increased competition from apps like Instagram’s Threads, Mastodon, and new startups like Bluesky and noplace, which offer similar text-first social experiences. Despite the challenges, the integration of Grok AI aims to enhance user engagement and potentially drive subscription growth.

Google DeepMind researchers have introduced a new AI training technique called Joint Example Selection Training (JEST), which dramatically accelerates the AI training process. According to the researchers, the method needs up to 13 times fewer training iterations and 10 times less computation, making it a more energy-efficient option. This advancement is significant, as the AI industry is notorious for its high energy consumption, contributing to environmental concerns similar to those posed by cryptocurrency mining.

JEST improves AI training efficiency by selecting complementary data batches that enhance the model's learnability. Unlike traditional methods, which score and choose examples individually, JEST evaluates the composition of a batch as a whole. This multimodal contrastive learning approach identifies dependencies between data points, enabling faster and more efficient training with less computing power. Google demonstrated that using pre-trained reference models to guide the data selection process ensures high-quality, well-curated datasets, optimizing the training process.

The study's experiments, particularly with the common WebLI dataset, showed significant performance gains, proving the effectiveness of JEST. This technique, known as "data quality bootstrapping," emphasizes quality over quantity, quickly identifying highly learnable sub-batches to speed up the training process. If scalable, JEST could allow AI trainers to develop more powerful models with the same resources or reduce resource consumption for new models, addressing the energy demands and environmental impact associated with AI development.
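To make the idea of "highly learnable" data more concrete, here is a rough Python sketch. It is a simplified, per-example stand-in and not Google's implementation: JEST itself scores sub-batches jointly under a contrastive loss, which this toy version does not attempt. The scoring rule (learner loss minus reference-model loss), the function names, and the loss values are all illustrative assumptions.

```python
from typing import List


def learnability(learner_loss: float, reference_loss: float) -> float:
    """Assumed score: high when the model being trained still gets the
    example wrong but a pre-trained reference model finds it easy."""
    return learner_loss - reference_loss


def select_sub_batch(
    learner_losses: List[float],
    reference_losses: List[float],
    keep: int,
) -> List[int]:
    """Return the indices of the `keep` highest-scoring examples.

    Simplification: examples are ranked one by one. The joint, batch-level
    selection that JEST is named for is not modelled here.
    """
    if len(learner_losses) != len(reference_losses):
        raise ValueError("both loss lists must have the same length")
    scores = [
        learnability(l, r) for l, r in zip(learner_losses, reference_losses)
    ]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return sorted(ranked[:keep])


# Hypothetical losses for a four-example "super-batch".
learner = [2.0, 0.5, 1.5, 3.0]
reference = [0.5, 0.25, 1.25, 1.0]
print(select_sub_batch(learner, reference, keep=2))  # prints [0, 3]
```

Examples 0 and 3 win because the learner's loss on them is much higher than the reference model's, which is the sense in which they still have something to teach.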

AI-based lie detectors are proving to be more effective than humans at spotting lies, as demonstrated in a recent study by Alicia von Schenk and her team at the University of Würzburg. They developed a tool using Google’s AI language model BERT, which was able to distinguish true from false statements 67% of the time, outperforming the typical human accuracy of around 50%. However, when people chose to use the AI tool, their reliance on its predictions led to a higher rate of accusations, raising concerns about the potential social impact of such technology.

While these tools could help identify misinformation on social media or uncover inaccuracies in job applications, their accuracy is still a concern. Even an AI system with 80% or 99% accuracy could lead to significant numbers of false accusations, undermining trust and social bonds. The study found that people were generally skeptical about using AI lie detectors, but those who did trust the technology tended to follow its predictions closely, which could further exacerbate issues of trust.

The historical fallibility of lie detection methods like the polygraph, which measures physiological responses to stress, highlights the risks of relying on imperfect technologies. Given the potential for widespread use and the ease of scaling AI lie detectors, von Schenk emphasizes the need for thorough testing to ensure these tools are substantially better than humans before they are widely adopted. Without rigorous testing and high accuracy standards, the use of AI lie detectors could do more harm than good by increasing false accusations and eroding trust in society.
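A quick back-of-the-envelope calculation shows why even a 99% accurate detector can produce a lot of false accusations at scale. The sketch below assumes, for illustration only, that the detector's error rate applies equally to truthful statements and that one million truthful statements get screened. Neither figure comes from the study, and the function name is made up for this example.

```python
def expected_false_accusations(truthful_statements: int, accuracy_percent: int) -> int:
    """Expected number of truthful statements wrongly flagged as lies.

    Assumption: the detector is wrong on (100 - accuracy_percent)% of
    truthful statements, i.e. its errors are spread evenly.
    """
    if not 0 <= accuracy_percent <= 100:
        raise ValueError("accuracy_percent must be between 0 and 100")
    return truthful_statements * (100 - accuracy_percent) // 100


# Hypothetical volume: one million truthful statements screened.
screened = 1_000_000
for accuracy in (67, 80, 99):
    wrongly_flagged = expected_false_accusations(screened, accuracy)
    print(f"{accuracy}% accurate: {wrongly_flagged:,} false accusations")
# 67% accurate: 330,000 false accusations
# 80% accurate: 200,000 false accusations
# 99% accurate: 10,000 false accusations
```

Under these assumptions, raising accuracy from 80% to 99% cuts false accusations twentyfold, yet still leaves 10,000 people wrongly accused per million truthful statements.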

Other stuff

OpenAI’s ChatGPT Mac app was storing conversations in plain text

CIOs’ concerns over generative AI echo those of the early days of cloud computing

The US intelligence community is embracing generative AI

Paper: No Need to Lift a Finger Anymore? Assessing the Quality of Code Generation by ChatGPT

Cloudflare launches a tool to combat AI bots

Workspaice: Human+AI, creating together

Meet Mercy and Anita – the African workers driving the AI revolution, for just over a dollar an hour

Tool preventing AI mimicry cracked; artists wonder what’s next

All your ChatGPT images in one place 🎉

You can now search for images, see their prompts, and download all images in one place.

Voice Isolator by ElevenLabs - Free voice isolator and background noise remover

Calypso - Your AI-First Public Equities Copilot

Archivist: AI Search for Code - Blazingly fast semantic search for your codebase

Pillser Ask - Ask anything about supplements - your personalized AI expert

TTSynth.com - Convert text to speech with natural voices free online

BuilderKit - Build and ship AI tools super fast

Suno - Make any song you can imagine, anytime & anywhere

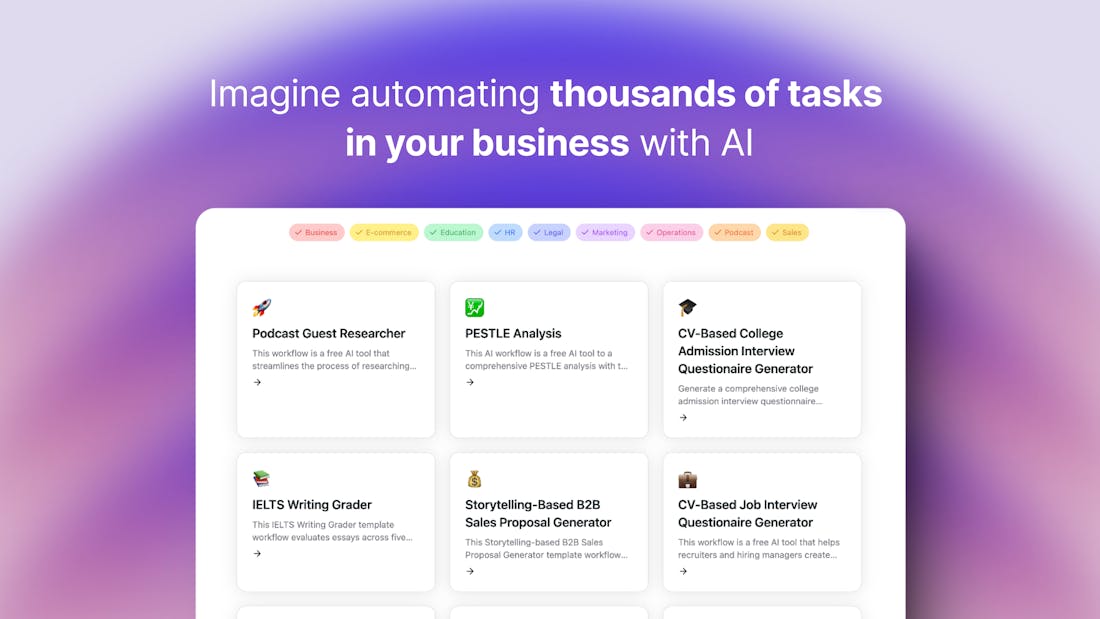

MindPal - Build any internal AI tool in 5 minutes

Deep Nostalgia AI - Turn old photos into videos. Bring images to life.

Check your essay with Essay AI

Dataline - Chat with your data - AI data analysis and visualization


Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.
