OpenAI Has Begun Training a New Flagship A.I. Model

AI models have favorite numbers because they think they’re people

In today’s email:

🎧 AI headphones let the wearer listen to a single person in a crowd, by looking at them just once

🤯 The story of, by far, the weirdest bug I've encountered in my CS career.

👀 Ex-OpenAI Director Says Board Learned of ChatGPT Launch on Twitter

🧰 10 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

OpenAI has announced the training of a new flagship artificial intelligence model to succeed GPT-4, aiming to achieve "artificial general intelligence" (AGI), a machine capable of performing any task a human brain can. This new model will enhance AI products like chatbots, digital assistants, search engines, and image generators. Additionally, OpenAI has established a Safety and Security Committee to address potential risks associated with the new technology, including disinformation, job displacement, and broader existential threats.

The company's recent developments include the release of an updated GPT-4 version, GPT-4o, which can generate images and respond in a highly conversational voice. This advancement led to controversy when actress Scarlett Johansson claimed it mimicked her voice without permission, though OpenAI denied this. Furthermore, OpenAI faces legal challenges, such as a copyright infringement lawsuit from The New York Times over the use of its content in AI training.

OpenAI's co-founder Ilya Sutskever, who was leading safety efforts, recently left the company, raising concerns about the handling of AI risks. His departure, along with the resignation of Jan Leike, who co-ran the Superalignment team with Sutskever, casts doubt on the future of OpenAI's long-term safety research. John Schulman, another co-founder, will now lead the safety research, with the new committee overseeing and guiding the company's approach to mitigating technological risks.

Jan Leike, a prominent AI researcher who recently resigned from OpenAI and publicly criticized its approach to AI safety, has joined Anthropic to lead a new "superalignment" team. In a post on X, Leike stated that his team will focus on various aspects of AI safety and security, including scalable oversight, weak-to-strong generalization, and automated alignment research.

A source familiar with the matter told TechCrunch that Leike will report directly to Anthropic's chief science officer, Jared Kaplan. Current Anthropic researchers working on scalable oversight will be restructured to report to Leike as his new team begins operations. This restructuring aims to enhance techniques for controlling large-scale AI behavior in predictable and desirable ways.

Leike's team at Anthropic mirrors the mission of OpenAI’s recently dissolved Superalignment team, which he co-led. The Superalignment team at OpenAI aimed to solve the core technical challenges of controlling superintelligent AI within four years but faced constraints due to OpenAI's leadership. Anthropic, led by CEO Dario Amodei, has positioned itself as more safety-focused than OpenAI. Amodei, who split from OpenAI over disagreements regarding its commercial focus, founded Anthropic with several former OpenAI employees, including former policy lead Jack Clark.

AI models often exhibit human-like behavior in their responses, a phenomenon highlighted by a recent experiment in which major language models were asked to pick a random number between 0 and 100. The results showed that these models have "favorite" numbers, much like humans do. For example, OpenAI’s GPT-3.5 Turbo frequently chooses 47, Anthropic’s Claude 3 Haiku prefers 42, and Gemini often picks 72. Despite their sophisticated algorithms, these models demonstrated human-like biases by avoiding low and high numbers and rarely selecting numbers with repeated digits or round numbers, which makes their picks peculiarly predictable.

This behavior arises because AI models generate responses based on patterns found in their training data, not because they understand randomness. Humans, when asked to predict or choose random numbers, often fall into predictable patterns, avoiding certain numbers and favoring others that seem more random. Similarly, AI models, trained on vast amounts of human-produced content, replicate these patterns, selecting numbers that appear frequently in the data they’ve processed. This leads to the impression that the models are making human-like choices, even though they lack any actual understanding or reasoning abilities.

The experiment underscores the importance of recognizing that AI models are sophisticated pattern mimics, not independent thinkers. They generate outputs based on statistical likelihoods derived from their training data, imitating human responses without comprehension. This can be seen in various tasks, from picking random numbers to providing recipe suggestions. The apparent human-like behavior of these models is a reflection of their training on human data, emphasizing the need for critical awareness when interacting with AI systems.
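The experiment described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `fake_model_pick` is a hypothetical stand-in for a real model call, and its 60% bias toward 42, 47, and 72 is an invented weight, not a measured result. The sketch tallies repeated picks and compares how much of the total the three most common answers take up against a truly uniform picker.

```python
import random
from collections import Counter


def fake_model_pick(rng):
    """Hypothetical stand-in for asking a model for a 'random' number.

    The 0.6 bias toward three favorites is an assumption for illustration,
    not data from the experiment.
    """
    favorites = [42, 47, 72]
    if rng.random() < 0.6:
        return rng.choice(favorites)
    return rng.randint(0, 100)


def uniform_pick(rng):
    """Baseline: every number from 0 to 100 is equally likely."""
    return rng.randint(0, 100)


def tally_picks(pick_fn, trials, seed=0):
    """Call pick_fn `trials` times and count how often each number appears."""
    rng = random.Random(seed)
    return Counter(pick_fn(rng) for _ in range(trials))


def top_share(counts, k=3):
    """Fraction of all picks that went to the k most common numbers."""
    total = sum(counts.values())
    return sum(count for _, count in counts.most_common(k)) / total


if __name__ == "__main__":
    trials = 1000
    biased = tally_picks(fake_model_pick, trials)
    fair = tally_picks(uniform_pick, trials)
    print("simulated model, top 3:", biased.most_common(3))
    print("share of picks in top 3:", round(top_share(biased), 2))
    print("uniform baseline, top 3:", fair.most_common(3))
    print("share of picks in top 3:", round(top_share(fair), 2))
```

With a real model, `fake_model_pick` would be swapped for a function that sends the prompt and parses the reply. A top-three share far above the uniform baseline is the "favorite number" effect.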

Helen Toner, a former OpenAI board member, revealed on The TED AI Show podcast that the board was not told in advance about ChatGPT's launch in 2022 and learned of it on Twitter. The chatbot went on to become a significant success. Toner also discussed the series of events that led to the firing of CEO Sam Altman in November 2023. In response, Bret Taylor, the current board chair, expressed disappointment that Toner continues to revisit these issues and emphasized that an independent review found no concerns related to product safety, security, or company finances.

Toner also criticized Altman's leadership, stating that he did not disclose his involvement with OpenAI’s startup fund and often provided inaccurate information about the company’s safety processes. This lack of transparency made it difficult for the board to assess the effectiveness of safety measures. Due to these issues, Toner and other directors concluded they could no longer trust Altman’s information. Following Altman's firing, a significant portion of the company's employees demanded his reinstatement, which eventually led to board members, including Toner, leaving the board.

In a recent article in The Economist, Toner and another former director, Tasha McCauley, argued that OpenAI was not capable of self-regulation and called for government intervention to ensure the safe development of powerful AI. They believe that external regulation is necessary to manage the risks associated with AI technology and ensure its benefits are shared by all of humanity.

Other stuff

Microsoft, Beihang release MoRA, an efficient LLM fine-tuning technique

AI headphones let the wearer listen to a single person in a crowd, by looking at them just once

The story of, by far, the weirdest bug I've encountered in my CS career.

After Pegasus Was Blacklisted, Its CEO Swore Off Spyware. Now He’s the King of Israeli AI.

What We Learned from a Year of Building with LLMs

Build an agent that can apply to jobs with a PNG of your resume using open-source frameworks!

I built a GPT model in 10 hours (Video)

Facebook will soon use your photos, posts, and other info to train its AI. You can opt-out (but it's complicated)

Google adds AI-powered features to Chromebook

How does AI impact my job as a programmer?

'Overlooked' data workers who train AI speak out about harsh conditions

Superpower ChatGPT now supports voice 🎉

Text-to-Speech and Speech-to-Text. Easily have a conversation with ChatGPT on your computer

LLMWhisperer - Get complex documents ready for LLM consumption. Try for free

vocalized.dev - Split test real-time conversation with different voice AI providers.

AI CSS Animations - Make complex animations with your voice and AI

Map Scrapper - Get Local Leads with the power of AI

Zen Quiz - Turn your notes into quizzes

Frontly - Build AI-powered SaaS apps and internal tools with no code

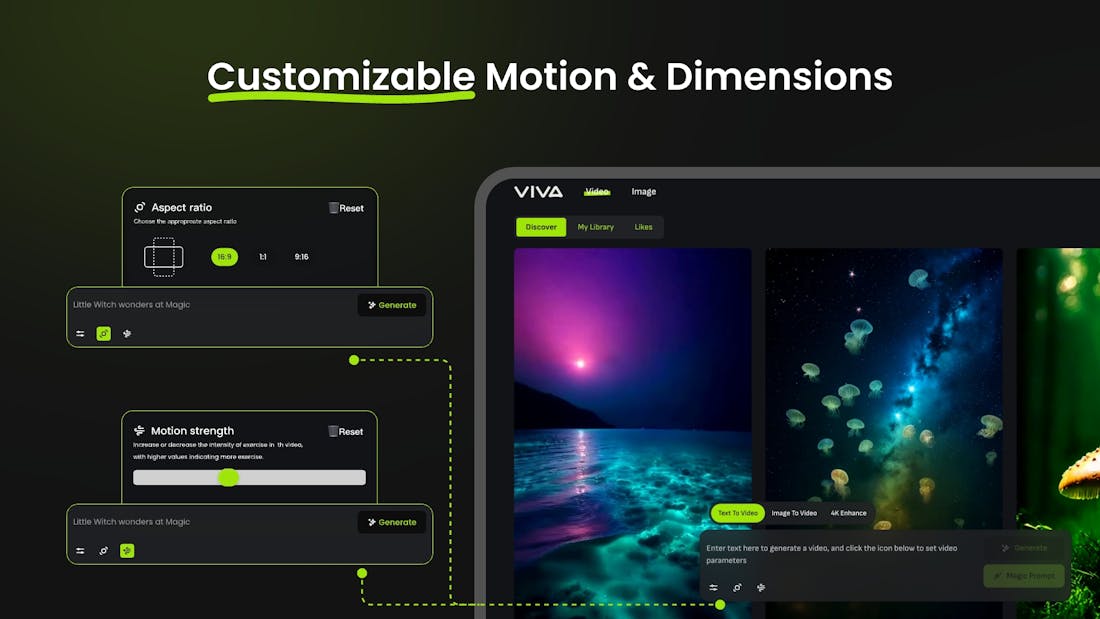

VIVA - AI-powered creative visual design platform

Lawformer AI - Turn your document databases into AI-powered libraries

Tonic Textual - Get your unstructured data AI-ready in minutes

Squire AI - AI code reviews & PR descriptions

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.