2 months old, $2B valuation

AI Starts to Sift Through String Theory’s Possibilities

In today’s email:

🍎 Apple releases open-source AI models designed to run on-device

🐇 Rabbit R1: a fun, funky, unfinished AI gadget

👀 Meta’s A.I. Assistant Is Fun to Use, but It Can’t Be Trusted

🧰 10 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

Cognition Labs, an AI startup, has reached a $2 billion valuation after securing $175 million in funding led by Peter Thiel’s Founders Fund. That is nearly six times the $350 million valuation it held in January and reflects strong investor confidence in the startup's potential. Founded in November 2023, Cognition Labs made headlines with Devin, its AI software engineer, which has drawn interest for its ability to autonomously write code and fine-tune AI models.

Devin was launched in March and has since been evaluated by several top tech companies. It showed impressive autonomous coding capabilities, resolving 13.86% of the real-world GitHub issues in the SWE-bench benchmark, a significant improvement over previous models. This result has contributed heavily to the startup's rapid valuation increase and demonstrates growing market interest in AI tools that can automate complex software development tasks.

Despite its young age, Cognition Labs is positioning itself alongside major AI companies such as France's Mistral and Perplexity AI, both valued in the billions. The new funding will likely go toward product development, improving Devin's capabilities and expanding the company's market reach. The round underscores how quickly products like Devin are changing expectations and investment patterns in the AI sector.

String theory, a field that has fascinated physicists for decades due to its elegant simplicity and promise as a "theory of everything," posits that the fundamental constituents of the universe are one-dimensional strings whose vibrations determine the particles and forces of nature. Recently, neural networks have helped researchers make progress in understanding how these strings could give rise to the known universe. These AI systems let researchers explore how different configurations of six extra, tiny dimensions (beyond the four we can perceive) shape the physical laws and particles we observe.

In practical terms, these dimensions are theorized to be compactified in complex shapes known as Calabi-Yau manifolds, which could potentially dictate the types of particles and forces in our universe through their unique geometric properties. Recent efforts using machine learning have focused on calculating these geometries more accurately, allowing physicists to predict the types of macroscopic worlds that might emerge from specific microscopic string configurations. This marks a significant step in applying string theory to derive real-world particle physics, though it has yet to conclusively describe our own universe.

Despite these promising developments, the field of string theory faces enormous challenges, primarily due to the vast number of possible configurations (estimated to be around \(10^{500}\)) and the current inability to precisely predict which might correspond to our universe. Researchers remain optimistic, utilizing neural networks to explore these possibilities more efficiently, but the practical application of string theory in predicting new, verifiable physical phenomena remains a significant and unresolved challenge. The overarching hope is to find a model within string theory that not only aligns with the known universe but also offers predictions that can be experimentally confirmed, thus fulfilling the theory's original promise.

Snowflake has introduced a new large language model named Arctic, optimized for complex enterprise tasks such as SQL generation, code generation, and instruction following. Arctic uses a 'mixture-of-experts' (MoE) architecture, in which the model's 480 billion parameters are divided into 128 expert subgroups, so that only about 17 billion parameters are active for any given input. This architecture enables Arctic to deliver competitive performance across various benchmarks, outperforming some models from Databricks and nearly matching models such as Llama 3 and Mixtral in areas like SQL generation and coding.
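To make the mixture-of-experts idea concrete, here is a minimal toy sketch of expert routing in plain Python. It is not Snowflake's implementation: the gating function, the choice of routing each input to the top 2 experts, the toy "experts" (simple scaling functions), and every name in the code are assumptions made for illustration. Only the 128-expert count and the 480 billion / 17 billion parameter figures come from the article.

```python
import math
import random


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts' weights sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, gate_weights, k=2):
    """Run only the k selected experts and mix their outputs by gate weight."""
    # One gate score per expert: dot product of the input with that expert's gate vector.
    gate_scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    routes = top_k_route(gate_scores, k)
    out = [0.0] * len(x)
    for idx, weight in routes:
        y = experts[idx](x)  # every other expert stays idle for this input
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, [idx for idx, _ in routes]


def make_expert(scale):
    """Hypothetical stand-in for an expert network: scales its input."""
    return lambda x: [scale * xi for xi in x]


NUM_EXPERTS = 128  # expert count reported in the article
DIM = 4            # toy input size (assumption)
rng = random.Random(0)
experts = [make_expert(1.0 + i / NUM_EXPERTS) for i in range(NUM_EXPERTS)]
gate_weights = [[rng.uniform(-1, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]

output, used = moe_forward([0.5, -1.0, 2.0, 0.1], experts, gate_weights, k=2)
print("experts used:", used)

# Share of parameters active per input, using the article's figures.
active_fraction = 17 / 480
print(f"active share: {active_fraction:.1%}")
```

The point of the design is that compute per input scales with the handful of experts that are selected, not with the total parameter count, which is how a 480-billion-parameter model can run with roughly 3.5% of its parameters active at a time.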

Arctic has been designed to serve the enterprise market, focusing on creating generative AI applications that can simplify and enhance business operations. The model has shown promising results in several benchmarks, scoring 65% on average in enterprise-related tasks. It performed exceptionally well in the Spider benchmark for SQL generation with a 79% score and exhibited solid results in coding and instruction-following tasks. Arctic's efficiency is highlighted by its low compute consumption, which is significantly less than that of its competitors, enabling cost-effective operations.

The model is available under an Apache 2.0 license, ensuring broad accessibility for personal, commercial, or research purposes. Snowflake offers Arctic through its LLM app development service, Cortex, and across various model gardens and catalogs such as Hugging Face and Microsoft Azure. Alongside the model, Snowflake is also providing a data recipe for efficient fine-tuning and a research cookbook that offers insights into the MoE architecture, aiming to assist enterprises and developers in leveraging AI technologies more effectively.

Apple has launched OpenELM, an open-source project featuring small language models intended to run on-device, without the need for cloud connectivity. The release includes eight models ranging in size from 270 million to 3 billion parameters, aimed at efficient text generation. The models are designed for devices such as laptops and smartphones and are publicly available on Hugging Face.

The OpenELM models have been developed by a team led by Sachin Mehta, alongside Mohammad Rastegari and Peter Zatloukal. Apple's effort marks a shift towards transparency in their traditionally closed approach, emphasizing community empowerment and future research facilitation. The models are pre-trained on a vast dataset of 1.8 trillion tokens from sources like Reddit and Wikipedia, optimized for parameter-efficient performance using a layer-wise scaling strategy.
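The article does not spell out what layer-wise scaling involves. As a rough sketch of the general idea, the snippet below gives each transformer layer a different width by linearly interpolating two multipliers from the first layer to the last, so that parameters are spread non-uniformly across depth. The function name, the multiplier names, and all numeric values are hypothetical and are not OpenELM's actual configuration.

```python
def layerwise_scaling(num_layers, d_model, head_dim,
                      alpha_min, alpha_max, beta_min, beta_max):
    """Return a per-layer config with interpolated head counts and FFN widths.

    alpha scales the number of attention heads; beta scales the feed-forward
    hidden size. Both grow linearly from the first layer to the last.
    """
    configs = []
    for i in range(num_layers):
        # Position of this layer in [0, 1]; a single-layer model uses the minimum.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        alpha = alpha_min + (alpha_max - alpha_min) * t
        beta = beta_min + (beta_max - beta_min) * t
        num_heads = max(1, round(alpha * d_model / head_dim))
        ffn_dim = round(beta * d_model)
        configs.append({"layer": i, "num_heads": num_heads, "ffn_dim": ffn_dim})
    return configs


# Hypothetical toy settings, chosen only to show the shape of the result.
configs = layerwise_scaling(num_layers=4, d_model=1024, head_dim=64,
                            alpha_min=0.5, alpha_max=1.0,
                            beta_min=1.0, beta_max=4.0)
for cfg in configs:
    print(cfg)
```

With these toy values the early layers are narrow and the later layers wide, in contrast to a conventional transformer where every layer has the same shape.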

In performance terms, the OpenELM models show promising results, particularly the 450-million-parameter model, which surpasses the OLMo model in accuracy. However, they are not at the cutting edge of the field, especially when compared to Microsoft’s Phi-3 Mini. The community has responded positively to Apple's open-source approach and expects the models to keep improving and to be applied across a wide range of platforms.

Other stuff

A morning with the Rabbit R1: a fun, funky, unfinished AI gadget

Why the AI Industry’s Thirst for New Data Centers Can’t Be Satisfied

Meta’s A.I. Assistant Is Fun to Use, but It Can’t Be Trusted

Cohere open-sourced their chat interface.

Superpower ChatGPT now supports voice 🎉

Text-to-Speech and Speech-to-Text. Easily have a conversation with ChatGPT on your computer.

Augment - The best software comes from Augmenting developers – not replacing them.

Nooks AI - Sales superpowers on speed dial

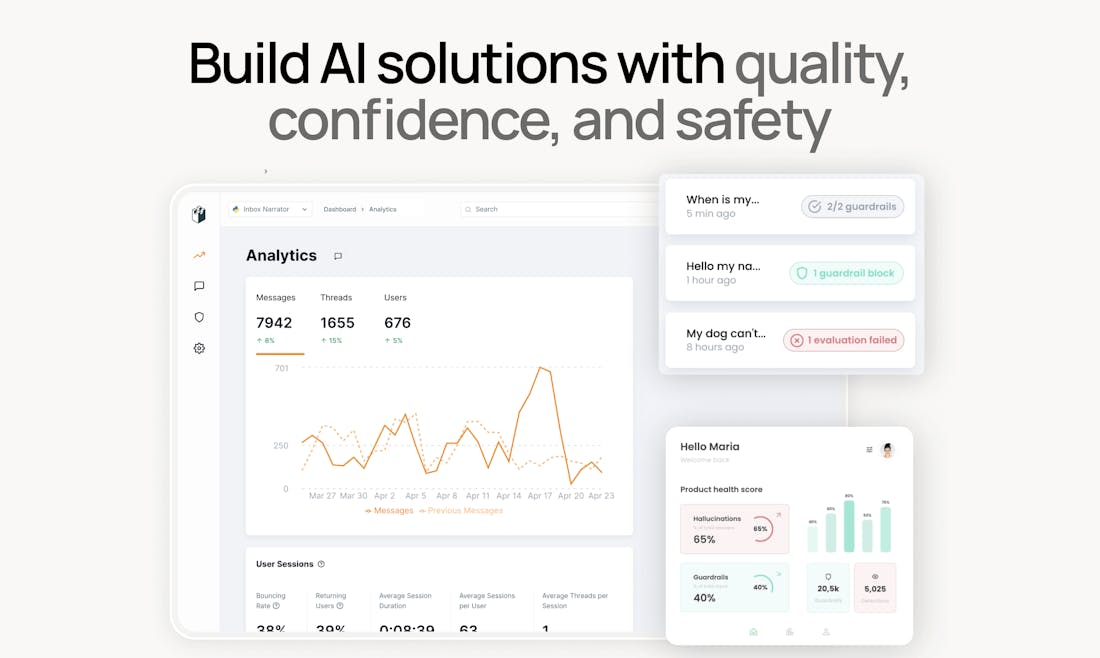

LangWatch - Understand, measure, and improve your LLMs

SecBrain AI - Use AI to save and remember everything

bentolingo - Your daily AI language bento box

MarketerGrad by Pangea - Use AI to instantly match with top fractional marketers

Dart - The ultimate AI project management tool

Candle - Chat with your money

Inboxly AI - Your AI-powered public mailbox to be limitlessly reachable

Stainless - Generate best-in-class SDKs.

Enhance Your Workflow with Superpower ChatGPT Pro: Introducing the Right-Click Menu Feature!

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.