AI outperforms humans in most benchmarks

Will Zuck open source the $10 billion model?

In today’s email:

🔥 Sora was used to show what TED will look like in 40 years

🍔 ‘Eat the future, pay with your face’: my dystopian trip to an AI burger joint

☠️ OpenAI's GPT-4 can exploit real vulnerabilities

🧰 9 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

The 2024 AI Index report from Stanford University’s Institute for Human-Centered Artificial Intelligence (HAI) details the rapid advancements AI has made, surpassing human abilities in several benchmarks like image classification, reading comprehension, and natural language inference. The report highlights the need for new, more challenging benchmarks as AI has not only met but exceeded the old ones, which are now seen as obsolete. It also notes AI's impressive improvements in handling complex tasks such as competition-level math problems and visual commonsense reasoning.

Despite these advancements, AI still shows significant limitations, particularly in generating reliable content without 'hallucinations'—a term for presenting false information as facts. This issue was notably demonstrated when a lawyer faced a fine for submitting AI-generated legal documents without verification. The AI Index also evaluates the truthfulness of AI, using benchmarks like TruthfulQA, with newer models like GPT-4 showing substantial improvement in providing truthful answers.

The report also delves into AI-generated images, examining the progression in text-to-image technology with platforms like Midjourney and assessing models using the Holistic Evaluation of Text-to-Image Models (HEIM). While no single model excels at all criteria, certain models have achieved notable success in specific aspects such as image quality and aesthetic appeal. The ongoing evolution of AI technology promises even more radical changes in the future, posing challenges and opportunities in equal measure.

Mark Zuckerberg - Llama 3, $10B Models, Caesar Augustus, & 1 GW Datacenters

Mark Zuckerberg discusses the evolution and future plans for Meta's AI development on a podcast. He highlights the launch of Meta AI's new models, particularly Llama-3, which is being rolled out as open source and is set to enhance Meta's AI capabilities significantly. Zuckerberg emphasizes the potential of AI in various applications, from real-time image generation to enhancing Meta's platforms like Facebook and Messenger with smarter, more responsive AI features.

Zuckerberg talks about the strategic considerations behind Meta's AI development, including the extensive use of H100 GPUs to bolster capabilities for projects like Reels and other AI-driven recommendations. This strategic investment aims to prepare Meta for future needs and innovations, reflecting Zuckerberg's broader vision of continuously pushing technological boundaries to remain at the forefront of AI advancements.

On the podcast, Zuckerberg also reflects on his personal motivations and the philosophical approach guiding his leadership at Meta. He discusses the importance of open-source development in fostering a competitive yet cooperative technological ecosystem. Zuckerberg's vision for Meta emphasizes not just technological advancement but also creating a balanced, open framework that supports broad, global innovation in AI and beyond.

OpenAI's GPT-4 can exploit real vulnerabilities

Researchers from the University of Illinois Urbana-Champaign have demonstrated that OpenAI's GPT-4 can exploit real-world security vulnerabilities when given their CVE (Common Vulnerabilities and Exposures) advisories. Their study showed that GPT-4 could autonomously exploit 87% of a set of 15 one-day vulnerabilities, which are security flaws that have been disclosed but remain unpatched. This rate was far higher than that of the other models tested and of open-source vulnerability scanners like ZAP and Metasploit, which failed to exploit any of the vulnerabilities in these tests.

The paper highlighted that such large language models, when combined with automation frameworks like ReAct implemented in LangChain, can perform exploits more efficiently and at a lower cost than traditional methods. Daniel Kang, an assistant professor at UIUC and co-author of the study, noted that the LLM agent's effectiveness significantly dropped to 7% when it was denied access to the CVE descriptions, indicating the critical role of detailed vulnerability data in enabling such exploits.
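As a quick sanity check on those percentages, here is a minimal Python sketch that converts them back into counts. It assumes the 87% and 7% figures are rounded from whole-number results over the 15 vulnerabilities mentioned above; the function name `implied_count` is ours for illustration and does not come from the study.

```python
# Assumption: the reported 87% and 7% are rounded from whole-number
# counts out of the 15 one-day vulnerabilities in the test set.
TOTAL = 15


def implied_count(percent: float, total: int = TOTAL) -> int:
    """Return the whole number of successes closest to a reported percentage."""
    return round(percent / 100 * total)


with_cve = implied_count(87)     # GPT-4 given the CVE description
without_cve = implied_count(7)   # GPT-4 denied the CVE description

for label, count in [
    ("with CVE description", with_cve),
    ("without CVE description", without_cve),
]:
    print(f"{label}: {count}/{TOTAL} = {count / TOTAL:.1%}")
```

Under that assumption, the headline numbers correspond to 13 of 15 vulnerabilities exploited with the advisory text and 1 of 15 without it.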

Kang argues against limiting public access to security information as a defense against LLM-driven attacks, suggesting that transparency is essential for robust cybersecurity. The study aims to prompt proactive security measures like regular updates and patches to fend off potential threats posed by automated systems. At OpenAI's request, the researchers have not published the exact prompts they gave GPT-4, but they say they will share them on request.

Other stuff

OpenAI's unreleased Sora was used to show what TED will look like in 40 years

Los Angeles is using AI in a pilot program to try to predict homelessness and allocate aid

Employers and job candidates are dueling with AI in the hiring process

‘Eat the future, pay with your face’: my dystopian trip to an AI burger joint

OpenAI winds down DALL-E 2

Superpower ChatGPT now supports voice 🎉

Text-to-Speech and Speech-to-Text. Easily have a conversation with ChatGPT on your computer

Meta's Segment Anything Model (SAM) can now run in your browser w/ WebGPU

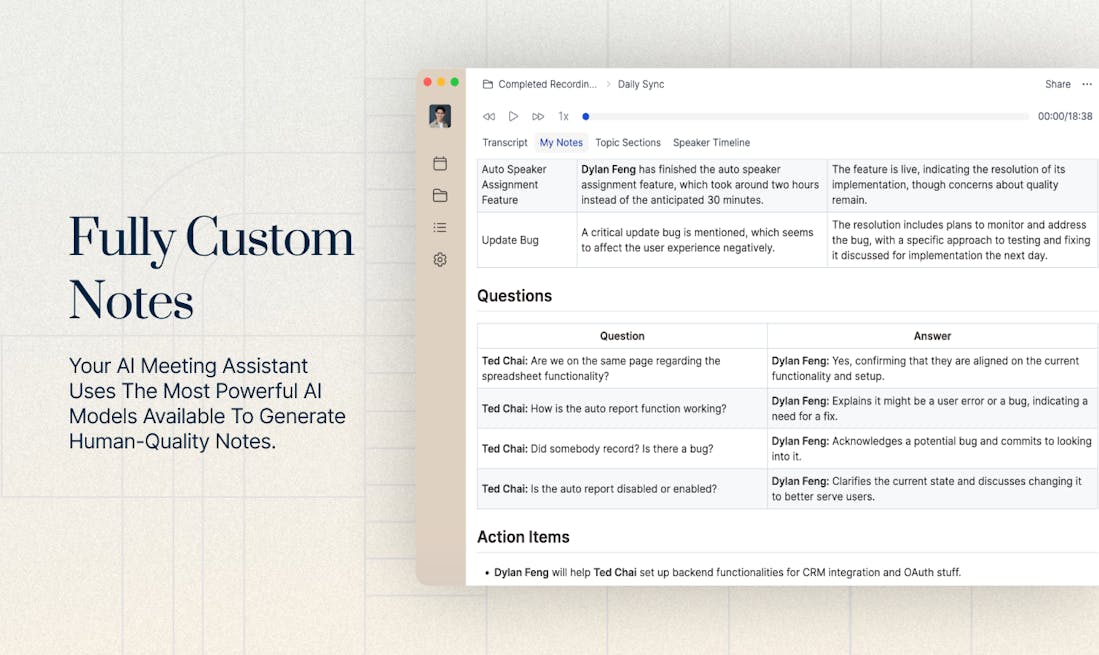

Sonnet - Meeting notes and CRM, automated

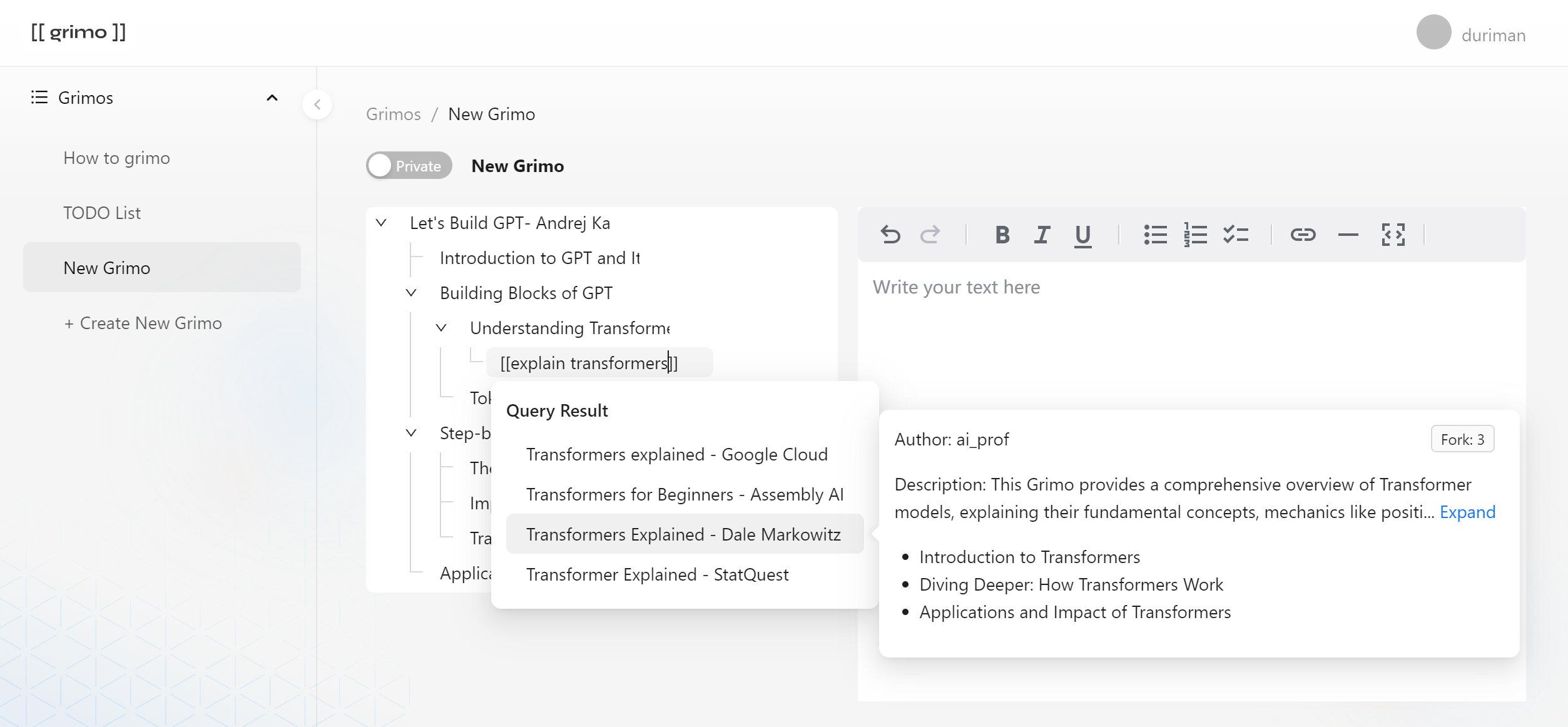

Grimo AI (Alpha) - Where Obsidian meets GitHub & Quora

Minard - natural language data visualization

Open Agent Studio - Build no-code agents to target markets untouched by AI

AI Flash.Cards - Create personalized flashcards from any text or pdf file

AiApply Website Builder - Build a microsite/link in bio in minutes - free

Parny - Social media-like AI incident management tool

Skyla - Custom ChatGPT for Shopify

Maximizing Productivity in ChatGPT: Mastering Advanced Folder Feature in Superpower ChatGPT – Part 1

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.