Superpower Daily

OpenAI moves away from safety

Slack Trains Its AI-Powered Features on User Messages, Files

In today’s email:

🤯 With AI startups booming, nap pods and Silicon Valley hustle culture are back

🗣️ What Do You Do When A.I. Takes Your Voice?

👀 How AI turned a Ukrainian YouTuber into a Russian

🧰 10 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

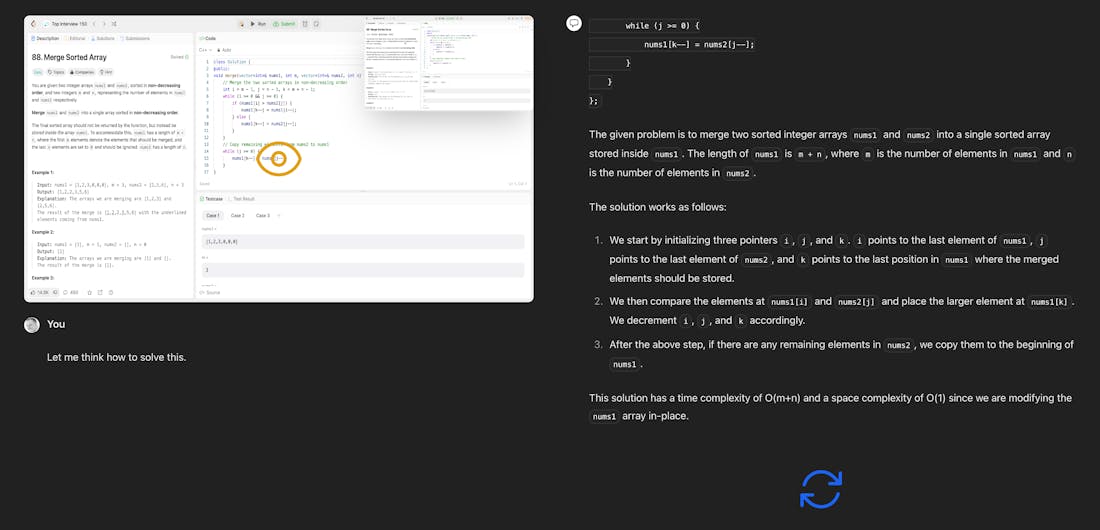

Following the resignations of its co-leaders, Ilya Sutskever and Jan Leike, OpenAI has disbanded its Superalignment team, which was established in July 2023 to address AI risks such as rogue behavior. Sutskever, who played a role in the ousting and subsequent reinstatement of CEO Sam Altman, announced his departure while expressing confidence that OpenAI will build safe AGI under its current leadership. He hinted at a new personal project but did not provide details. Leike cited long-standing disagreements over the company's priorities, criticizing OpenAI for putting "shiny products" ahead of safety.

The Superalignment team was dissolved in the same week OpenAI launched GPT-4o, its most human-like AI yet. The team had set out to tackle the challenges of superintelligence alignment within four years, and OpenAI had pledged a significant portion of its computing resources to the effort. However, Leike said the team struggled to secure adequate compute for its research and felt its mission was being undermined by the company's focus on product releases.

Following the disbandment, some former team members have been reassigned within the company. The Superalignment team's remit covered a range of risks, including misuse, economic disruption, disinformation, and bias. OpenAI has not publicly commented on the dissolution or on the future direction of its AI safety efforts.

Slack is training its machine learning features on user data, including messages, files, and uploads, and all users are opted in by default. This has frustrated users, who were given no advance notice and no initial chance to opt out. Slack uses this data to improve features such as channel recommendations, search results, autocomplete, and emoji suggestions. The company says it does not use this data to train its paid generative AI tools, and workspace administrators can request to opt out of the training dataset by email.

Corey Quinn, an executive at the Duckbill Group, voiced his frustration on social media, highlighting Slack's lack of clear communication about the policy. Others echoed this sentiment, including lawyer Elizabeth Wharton, who criticized Slack for not giving individual users an easier way to opt out. Users argued that the company should have adopted an opt-in approach, giving them more control over their data privacy.

In its response, Slack reiterated that while user data is used to train some machine learning models, it is not used to train generative AI tools, and that its models do not memorize or reproduce customer data. Yet Slack's AI page states, "Your data is your data. We don't use it to train Slack AI," a claim that has added to the confusion. Slack is not alone: other platforms such as Squarespace also opt users into AI training by default, requiring them to opt out manually through their settings.

When Jeffrey Wang, co-founder of AI research startup Exa Labs, posted on X about ordering affordable nap pods for the office, he didn't expect the overwhelming response. The post went viral, revealing demand for more than 100 units, although Wang had initially intended to order just two. It also sparked a debate about the practicality and hygiene of nap pods: some applauded the idea as a productivity boost, while others questioned why anyone should sleep at work instead of at home, seeing it as a red flag for work-life balance.

Exa Labs, which recently moved out of a hacker house where its team lived and worked together, reflects the resurgence of Silicon Valley's hustle culture, particularly in San Francisco's "Cerebral Valley." Wang emphasized that the nap pods are meant to support employees' need for rest, not to promote a culture of overwork. He acknowledged that startup life demands high commitment and intense effort, comparing it to a grueling academic experience, only harder. The company's success, marked by its Y Combinator graduation and a growing customer base, relies on this all-in mentality.

Even as hustle culture returns, startups eventually reach a point where they must balance productivity with reasonable work expectations. As companies grow and more employment laws apply to them, a sustainable work environment becomes crucial. As for hygiene, Wang said cleanliness in the nap pods wouldn't be an issue, citing an abundance of clean sheets from a recent company event. Ultimately, hustle culture may drive early growth, but sustainable practices are essential for long-term success and employee well-being.

Stephen Wolfram, known for his contributions to computational science and as the creator of Mathematica and WolframAlpha, shares his perspectives on AI's potential and challenges in a conversation with Reason's Katherine Mangu-Ward. Wolfram notes that while AI excels at human-oriented tasks such as language processing, it struggles to predict complex scientific phenomena because of computational irreducibility: some processes cannot be shortcut and can only be worked out by running them step by step. He believes AI can augment human work by serving as a linguistic user interface, making it easier for different systems to interact. He also stresses that AI's ability to generate content effortlessly challenges traditional notions of academic work and creativity.

Wolfram also discusses AI's implications for sectors including education and government. He envisions AI tutors personalized to each learner's interests, and a possible "promptocracy" in which AI processes public opinion to inform governance. He acknowledges the risk of AI amplifying human biases, but notes that its usefulness comes precisely from reflecting what humans want. He advocates treating AI suggestions critically rather than following them blindly, so as not to stifle innovation and creativity.

Regarding the future of AI, Wolfram warns against over-regulation, which could limit AI's potential benefits. He draws parallels between the evolution of AI and human society, emphasizing the need for new political and ethical frameworks to address AI’s growing role. Wolfram sees competition among AI developers as a stabilizing force, preventing monopolies and encouraging diverse approaches. He concludes that while AI presents unique challenges, it also offers unprecedented opportunities to elevate human capabilities and societal functions.

Other stuff

Facebook Parent’s Plan to Win AI Race: Give Its Tech Away Free

What Do You Do When A.I. Takes Your Voice?

It’s Time to Believe the AI Hype

AI's Cozy Crony Capitalism

Microsoft set to unveil its vision for AI PCs at Build developer conference

Google, OpenAI, and Meta Could Revolutionize Smart Glasses

In regards to recent stuff about how OpenAI handles equity.

AI Is Taking Over Accounting Jobs As People Leave The Profession

International Scientific Report on the Safety of Advanced AI

How AI turned a Ukrainian YouTuber into a Russian

Superpower ChatGPT now supports voice 🎉

Text-to-Speech and Speech-to-Text. Easily have a conversation with ChatGPT on your computer

Funcburger is a collection of simple functions you can stack together to create automation workflows.

Tapmention - Engage Every Reddit Mention. Capture Every Lead.

Narafy - An AI notes app centered around tags

Magicam - Real-time face swapping for any stream or meeting

User Evaluation AI - AI agent that conducts your user interviews

ANDRE - Automated survey data analysis & reporting

Buffup.AI - The AI assistant that knows what you need, powered by GPT-4o

Interviews Chat - Your personal interview prep & copilot

Hoop.dev for Databases - AI-powered database client built for teams

KardsAI - Study smarter, not harder!

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.