Sam Altman is back on the OpenAI board

Read this before you use OpenAI for Hiring

In today’s email:

🤯 LLMs surpass human experts in predicting neuroscience results

👀 Marques (MKBHD) interviews the OpenAI team about Sora

🪆 Welcome to the Valley of the Creepy AI Dolls

🧰 10 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

OpenAI today announced that Altman will be rejoining the company’s board of directors several months after losing his seat and being pushed out as OpenAI’s CEO. Joining him are three new members: former CEO of the Bill and Melinda Gates Foundation Sue Desmond-Hellmann, ex-Sony Entertainment president Nicole Seligman, and Instacart CEO Fidji Simo — bringing OpenAI’s board to eight people.

The members of the transitional board, formed after Altman’s firing in November, won’t be stepping down with the appointment of Desmond-Hellmann, Seligman and Simo. Former Salesforce co-CEO Bret Taylor (OpenAI’s current board chair), Quora CEO Adam D’Angelo and Larry Summers, the economist and former Harvard president, will remain in their roles on the board, as will Dee Templeton, a Microsoft-appointed board observer.

OpenAI has made efforts to move past the controversy by adding new board members to enhance diversity and implementing changes to improve governance. However, the company still faces scrutiny, including an ongoing SEC investigation and broader concerns about the impact of generative AI on society and the technology industry.

The incident has stirred discussions about transparency, leadership, and the role of AI companies in navigating ethical and societal challenges. While OpenAI aims to focus on its mission of advancing AI for the benefit of humanity, the fallout from Altman's ouster and the subsequent developments continue to spark debate about the future direction of the company and its leadership.

Recently, a conflict has arisen between Midjourney (MJ) and Stability AI, the developer of Stable Diffusion, over allegations of image theft. According to a tweet by AI enthusiast Nick St. Pierre, Stability AI employees are accused of stealing prompt and image pairs from Midjourney's database, leading to a 24-hour service outage. In retaliation, Midjourney has banned all employees of Stability AI from using its services.

In the aftermath of the incident, both David Holz, CEO of Midjourney, and Emad Mostaque, CEO of Stability AI, commented on the situation. Holz confirmed the theft and stated that his team was gathering more information, while Mostaque denied any involvement in the theft and offered to assist with the investigation. This interaction suggests that the CEOs are attempting to handle the situation amicably.

The irony of the situation has not gone unnoticed, as both companies are in the business of creating image-generating AI. The controversy continues to unfold, with limited details about who exactly is responsible for the theft or whether Stability AI had any role in directing the action. The tech community is closely watching how this dispute between two prominent AI image-generation companies will be resolved.

A recent study led by Xiaoliang Luo demonstrates that large language models (LLMs) can predict the outcomes of neuroscience experiments with greater accuracy than human experts. Using a 7-billion-parameter LLM fine-tuned on neuroscience literature, the team's AI outperformed human predictions across several neuroscience subfields on a benchmark called BrainBench. This advancement suggests that AI could significantly enhance the efficiency and focus of scientific research, particularly in complex fields like neuroscience, by guiding researchers toward more promising experimental avenues.

The findings reveal that LLMs, when fine-tuned with domain-specific data, can grasp intricate patterns in scientific literature, enabling them to make informed predictions about research outcomes. The use of AI in this context not only has the potential to accelerate scientific discovery by prioritizing high-likelihood experiments but also to optimize resource allocation in research funding and reduce experimental redundancy. While the study focuses on neuroscience, its implications extend to other scientific domains, highlighting AI's role as a complementary tool in the research process.

However, the study's scope and its reliance on abstracts for benchmarking call for further exploration to validate these findings across broader scientific contexts and with more comprehensive data sources. Despite the promising results, AI's role should be viewed as supplementary to human expertise, offering a data-driven perspective to enhance, not replace, the critical and creative decision-making inherent in scientific research. Future directions include expanding AI's application to other fields, improving model capabilities, and ensuring ethical AI use in scientific settings, emphasizing collaboration between AI and human expertise to drive innovation and discovery.

OpenAI has introduced a new generative AI model called Sora, discussed in an interview by Marques Brownlee (MKBHD) with OpenAI's Bill Peebles, Tim Brooks, and Aditya Ramesh. Sora is a video generation tool that blends diffusion models like DALL-E with large language models like GPT, creating photorealistic videos from text prompts. It's versatile in video lengths, aspect ratios, and resolutions, although it's not yet available for public use as it's in the feedback-gathering stage to refine its capabilities and ensure safety.

Sora showcases strengths in photorealism and the generation of longer videos while allowing for stylistic input, but faces challenges with rendering hands, physics, and maintaining consistent camera movements. OpenAI is focusing on gathering feedback to further enhance Sora, contemplating features like improved user controls and the addition of provenance classifiers to distinguish AI-generated content, similar to advancements made with DALL-E.

The potential of Sora excites the OpenAI team, as it opens up new possibilities for media creation and can significantly impact creative industries. However, generating videos with Sora is time-intensive, and the team is mindful of the dual-edged nature of such technology, emphasizing the need for responsible use and the implementation of safety measures to prevent misuse or the spread of misinformation.

Other stuff

Read this before you use OpenAI for Hiring

My attempt at an AI writing assistant for Chinese

Matrix multiplication breakthrough could lead to faster, more efficient AI models

All Chatbots are biased

Welcome to the Valley of the Creepy AI Dolls

AI Marilyn Monroe Marks Another Step Forward In Extending Celebrity Brand Value Beyond The Grave

Amid explosive demand, America is running out of power

Silicon Valley is pricing academics out of AI research

Pika Labs Introduces Sound Effects for Video Generation

Superpower ChatGPT now supports voice 🎉

Text-to-Speech and Speech-to-Text. Easily have a conversation with ChatGPT on your computer

DraftDeep - AI Legal Drafting Copilot for busy legal professionals.

Duckie - Resolve Engineering Issues Faster

Sieve - Build better AI with multiple models

Super RAG - Super performant RAG pipelines for AI apps

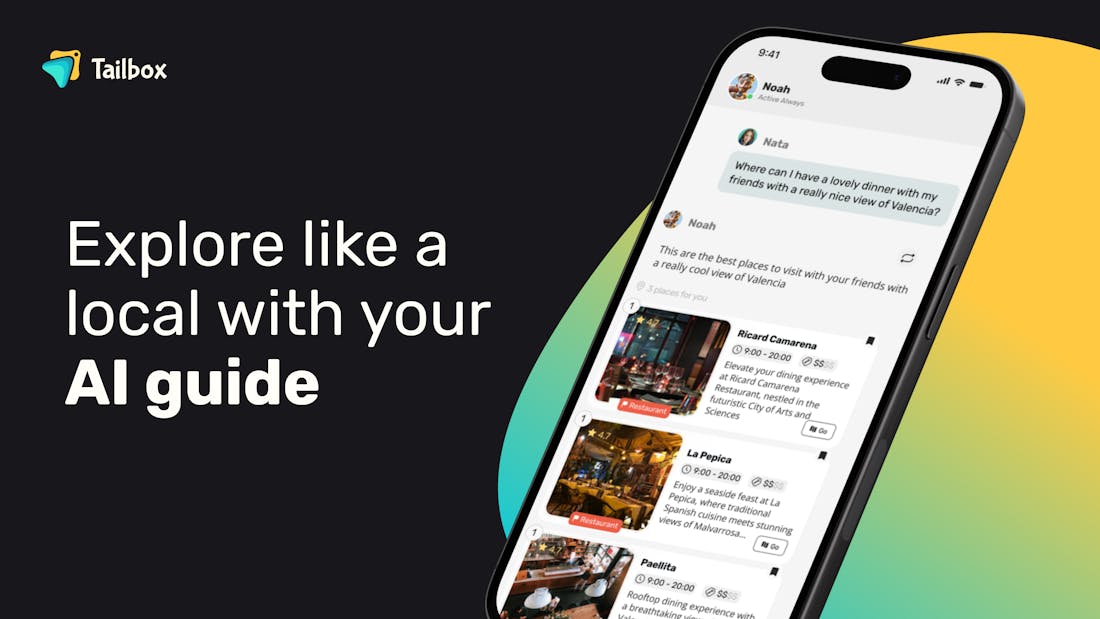

Tailbox - Mix of Pokémon Go and TikTok for travel

Charmed AI - End-to-end tools for 3D video game art

DryMerge - Automate work with plain English

Ocular AI - The future of work is here

DraftAid - From 3D models to production drawings using AI

LlamaGym - Fine-tune LLM agents with online reinforcement learning

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.