OpenAI introduces Sora, its text-to-video AI model 🤯

Google released Gemini 1.5 Pro

In today’s email:

👀 Meta’s new AI model learns by watching videos

🤔 Sam Altman Thinks The Current ChatGPT Is Akin To A “Barely Useful Cellphone”

🧑‍💻 Apple Readies AI Tool to Rival Microsoft’s GitHub Copilot

🧰 11 new AI-powered tools and resources. Make sure to check the online version for the full list of tools.

OpenAI has unveiled Sora, a video-generation model that turns text prompts into photorealistic videos up to one minute long. Sora can generate complex scenes with multiple characters, specific types of motion, and highly detailed backgrounds. It is designed to model how objects interact in the physical world, producing videos with compelling characters that express vibrant emotions. The model marks a significant leap beyond earlier text-to-image models and pushes the boundaries of AI-generated content.

Despite its advanced capabilities, Sora is not without its challenges, notably in simulating the physics of complex scenes and interpreting cause and effect accurately. OpenAI's introductory materials highlight the model's potential quirks, such as occasional unrealistic movements within a scene. Nevertheless, the examples provided, including an aerial view of California during the gold rush and a perspective from inside a Tokyo train, demonstrate Sora's impressive ability to create immersive and imaginative videos.

As of now, Sora is in a limited-release phase, accessible only to selected testers and creators for evaluation and feedback purposes. This cautious approach reflects OpenAI's awareness of the potential risks and ethical considerations associated with generating photorealistic AI videos. In light of concerns over the misuse of AI-generated content, OpenAI continues to explore safeguards, such as watermarking, to mitigate the impact of fake, AI-generated imagery on public perception and trust.

Meta's AI researchers have developed a new model called Video Joint Embedding Predictive Architecture (V-JEPA), designed to learn from video rather than written text. Large language models (LLMs) are often trained by masking words in a sentence and having the model fill them in; V-JEPA applies a similar approach to video, masking out parts of the frames and learning about the world from the surrounding visual context. The model is not generative: rather than reconstructing the hidden pixels, it aims to build an internal model of the world, and it excels at understanding complex interactions between objects. Yann LeCun, who leads Meta’s FAIR group, says the goal is machine intelligence that learns and adapts the way humans do, forming internal models of the world to complete tasks efficiently.
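To make the masking idea concrete, here is a toy Python sketch, loosely modeled on the description above and not Meta's code. A video is treated as a grid of patches indexed by (frame, row, column). The same spatial block is hidden in every frame (a "tube" mask, an assumption about the masking scheme), and the loss compares predicted and actual *embeddings* of the hidden patches rather than their pixels, which is what makes the approach non-generative. `embed` and `predict_masked` are hypothetical stand-ins for the learned encoder and predictor networks a real model would train.

```python
import random

def make_tube_mask(frames, rows, cols, block_h, block_w, seed=0):
    """Pick one spatial block and hide it in every frame (a 'tube')."""
    rng = random.Random(seed)
    r0 = rng.randrange(rows - block_h + 1)
    c0 = rng.randrange(cols - block_w + 1)
    return {
        (t, r, c)
        for t in range(frames)
        for r in range(r0, r0 + block_h)
        for c in range(c0, c0 + block_w)
    }

def embed(patch):
    # Hypothetical encoder stand-in: summarize a patch as (mean, range).
    return (sum(patch) / len(patch), max(patch) - min(patch))

def predict_masked(video, mask):
    # Hypothetical predictor stand-in: guess each hidden patch's embedding
    # as the average embedding of all visible (context) patches.
    visible = [embed(p) for idx, p in video.items() if idx not in mask]
    dims = len(visible[0])
    mean = tuple(sum(v[d] for v in visible) / len(visible) for d in range(dims))
    return {idx: mean for idx in mask}

def embedding_loss(video, mask, predictions):
    # Average squared distance between predicted and target embeddings;
    # raw pixel values are never compared directly.
    total = 0.0
    for idx in mask:
        target = embed(video[idx])
        total += sum((p - q) ** 2 for p, q in zip(predictions[idx], target))
    return total / len(mask)

# Toy "video": 4 frames of 4x4 patches, each patch holding 8 random pixel values.
FRAMES, ROWS, COLS = 4, 4, 4
rng = random.Random(1)
video = {
    (t, r, c): [rng.random() for _ in range(8)]
    for t in range(FRAMES)
    for r in range(ROWS)
    for c in range(COLS)
}
mask = make_tube_mask(FRAMES, ROWS, COLS, block_h=2, block_w=2)
predictions = predict_masked(video, mask)
loss = embedding_loss(video, mask, predictions)
print(f"hidden patches: {len(mask)}, embedding loss: {loss:.4f}")
```

A real model would train both stand-ins so the predicted embeddings move closer to the targets; the sketch only shows what gets hidden and where the loss is measured.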

The implications of V-JEPA extend beyond Meta, potentially transforming the broader AI ecosystem. It aligns with Meta's vision for augmented reality glasses, providing an AI assistant with a pre-existing audio-visual understanding of the world that can quickly adapt to a user's unique environment. Additionally, V-JEPA could lead to more efficient AI training methods, reducing the time, cost, and ecological impact associated with developing foundation models. This approach could democratize AI development, allowing smaller developers to train more capable models, reflecting Meta's strategy of open-source research.

Meta plans to enhance V-JEPA by integrating audio, adding another layer of data for the model to learn from, akin to a child's learning progression from watching muted television to understanding the context with sound. The company aims to release V-JEPA under a Creative Commons noncommercial license, encouraging researchers to explore and expand its capabilities, potentially accelerating progress towards artificial general intelligence by enabling models to learn from both sights and sounds.

Google has announced Gemini 1.5, the next generation of its Gemini AI models, with significant gains in performance and efficiency. The first model released for testing, the mid-size multimodal Gemini 1.5 Pro, supports a context window of up to 1 million tokens. This lets the model take in and understand large volumes of information across modalities in a single prompt, making it more useful for developers and enterprise customers. A new Mixture-of-Experts (MoE) architecture also makes the model more efficient to train and serve.
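Google's announcement does not describe how Gemini 1.5's Mixture-of-Experts layers are built, so the following is only a generic sketch of top-k MoE routing, not Gemini's implementation. A small router scores every expert for a given input, only the k highest-scoring experts actually run, and their outputs are mixed using softmax weights over the selected scores. Skipping the unselected experts is where the efficiency gain comes from. The experts, router weights, and `k=2` below are made-up illustrative values.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def moe_layer(x, router_weights, experts, k=2):
    """Send input vector x to the top-k experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's row).
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in router_weights]
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:k]
    gates = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for gate, i in zip(gates, chosen):
        y = experts[i](x)  # only the selected experts are ever evaluated
        out = [o + gate * yi for o, yi in zip(out, y)]
    return out, chosen

# Hypothetical experts: each just scales its input; `calls` records which ran.
calls = []

def make_expert(idx, scale):
    def expert(x):
        calls.append(idx)
        return [scale * xi for xi in x]
    return expert

experts = [make_expert(i, s) for i, s in enumerate([1.0, 2.0, 3.0, 4.0])]
router_weights = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, -1.0]]
out, chosen = moe_layer([2.0, 1.0], router_weights, experts, k=2)
print("experts used:", chosen, "output:", [round(v, 3) for v in out])
```

With these made-up numbers the router picks experts 0 and 2, so experts 1 and 3 are never called; in a real MoE model the experts are full neural sub-networks and the router is learned during training.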

Because it can take in so much data at once, Gemini 1.5 Pro can perform complex reasoning, analyze and summarize large amounts of content, and solve problems across text, code, images, audio, and video. Google's demonstrations include reasoning over an hour-long video, a large codebase, and lengthy transcripts of historical events. Google plans to roll out full access to the 1 million token context window while it works on optimizations to reduce latency and computational requirements.

To ensure responsible deployment, Gemini 1.5 has undergone extensive ethics and safety testing, aligning with Google's AI Principles. The model's development emphasizes continuous improvement in AI systems through rigorous testing for potential harms and the integration of safety measures into the model's governance processes. Google is offering a limited preview of Gemini 1.5 Pro to developers and enterprise customers, with plans to introduce pricing tiers and further improve the model's capabilities. This step marks a significant advancement in AI, opening new possibilities for creating, discovering, and building using AI technologies responsibly and efficiently.

Other stuff

Sam Altman Thinks The Current ChatGPT Is Akin To A “Barely Useful Cellphone”

I’ve gotten 100 free McDonald’s meals — thanks to this ChatGPT hack

Sam Altman owns OpenAI's venture capital fund

The biggest collection of Colab-based LLM fine-tuning notebooks

Largest text-to-speech AI model yet shows ‘emergent abilities’

Magika: AI-powered fast and efficient file type identification

Apple Readies AI Tool to Rival Microsoft’s GitHub Copilot

Superpower ChatGPT now supports voice 🎉

Text-to-Speech and Speech-to-Text. Easily have a conversation with ChatGPT on your computer

Lindy AI - Meet your AI employee.

Diarupt - Human-like AI Teammates

Automate your weekly updates

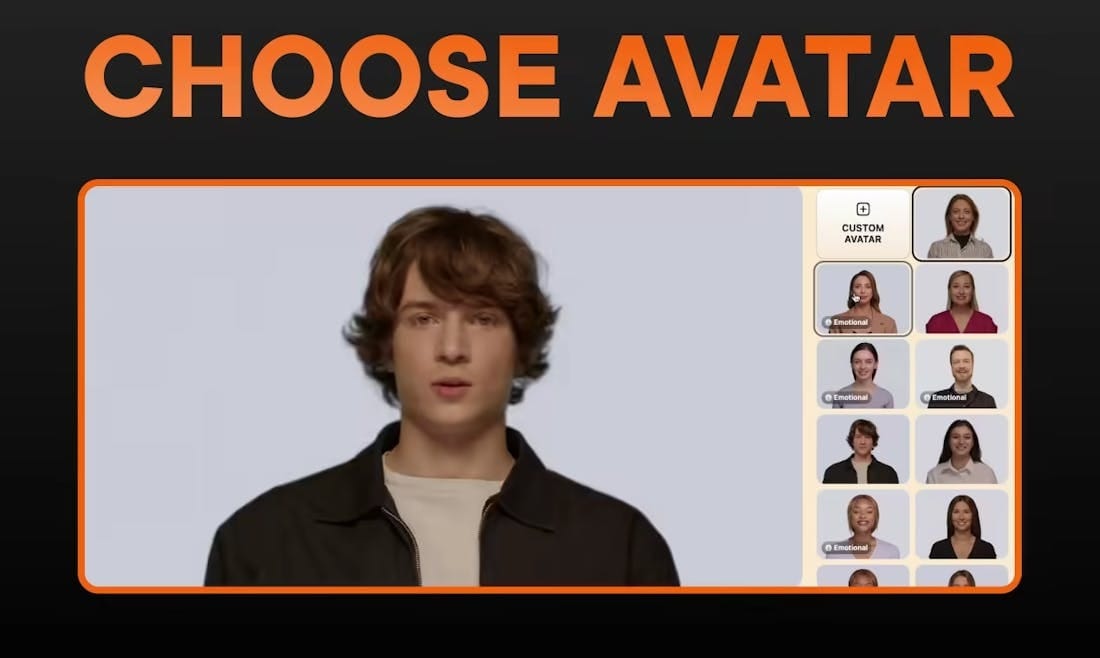

Studio Neiro AI - Scale your marketing videos with AI avatars

Intent by Upflowy - Turn your leads' browsing behavior into AI summaries

ChatAvatar - Text and image to 3D avatar

Squad AI - The product strategy tool for everyone

Guide.com - AI-powered trip planner

LangSmith General Availability - LLM application development, monitoring, and testing

Instant Architectural Analysis

How to create multiple custom instruction profiles on ChatGPT

Help share Superpower

⚡️ Be the Highlight of Someone's Day - Think a friend would enjoy this? Go ahead and forward it. They'll thank you for it!

Hope you enjoyed today's newsletter

Did you know you can add Superpower Daily to your RSS feed? https://rss.beehiiv.com/feeds/GcFiF2T4I5.xml

⚡️ Join over 200,000 people using the Superpower ChatGPT extension on Chrome and Firefox.